How to Implement Gradient Descent in Python to Find a Local Minimum

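Gradient descent is an iterative algorithm that minimizes a function by searching for its optimal parameters. It can be applied to functions of any dimension: 1-D, 2-D, 3-D, and so on. In this article we first find the minimum of a parabolic function (a curve in the 2-D plane), and then implement gradient descent in Python to find the optimal parameters of a linear regression equation with a single input variable (1-D).

Before moving to the implementation, let us list what the algorithm needs. To implement gradient descent we need a cost function to minimize, a number of iterations, a learning rate that determines the step size taken at each iteration while moving toward the minimum, the partial derivatives with respect to the weight and the bias that are used to update the parameters at each iteration, and a prediction function.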

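So far we have seen what gradient descent requires. Now let us map these pieces onto the algorithm and work through an example to understand it better. Consider the parabola $y = 4x^2$. By inspection, the function is smallest at x = 0, where y = 0. So x = 0 is the local minimum of $y = 4x^2$ (and, because this parabola has only one minimum, it is also the global minimum). Now let us look at the gradient descent algorithm and see how applying it reaches this minimum.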

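The Gradient Descent Algorithm

To find a local minimum, take steps from the current point that are proportional to the negative of the function's gradient at that point (that is, move against the gradient). Gradient ascent is the counterpart: taking steps proportional to the positive gradient (moving along the gradient) approaches a local maximum of the function.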

repeat until convergence

{

w = w - (learning_rate * (dJ/dw))

b = b - (learning_rate * (dJ/db))

}
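The update rule above can be sketched for a function of a single variable. This is only a minimal illustration, not a definitive implementation: the function name `minimize` and its parameters are hypothetical, and it runs for a fixed number of iterations rather than until convergence.

```python
def minimize(gradient, start, learning_rate=0.01, iterations=100):
    """Repeatedly step against the gradient, starting from `start`."""
    x = start
    for _ in range(iterations):
        # Move in the direction opposite to the gradient
        x = x - learning_rate * gradient(x)
    return x


# Example: f(x) = 4x^2 has gradient 8x and its minimum at x = 0.
x_min = minimize(lambda x: 8 * x, start=3.0, learning_rate=0.01, iterations=1000)
print(x_min)  # a value very close to 0
```

Passing the gradient as a function keeps the loop independent of the particular function being minimized.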

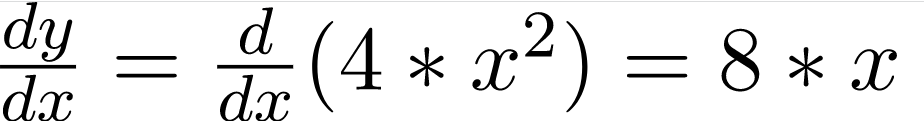

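Step 1: Initialize all the necessary parameters and derive the gradient function for the parabola $y = 4x^2$. The derivative of $x^2$ is $2x$, so the derivative of $4x^2$ is $8x$.

x0 = 3 (random initialization of x)

learning_rate = 0.01 (determines the step size while moving toward the local minimum)

gradient = $\frac{d}{dx}(4x^2) = 8x$ (the gradient function)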

Step 2: Let us run three iterations of gradient descent.

In each iteration, update the value of x according to the gradient descent formula.

Iteration 1:

x1 = x0 - (learning_rate * gradient)

x1 = 3 - (0.01 * (8 * 3))

x1 = 3 - 0.24

x1 = 2.76

Iteration 2:

x2 = x1 - (learning_rate * gradient)

x2 = 2.76 - (0.01 * (8 * 2.76))

x2 = 2.76 - 0.2208

x2 = 2.5392

Iteration 3:

x3 = x2 - (learning_rate * gradient)

x3 = 2.5392 - (0.01 * (8 * 2.5392))

x3 = 2.5392 - 0.203136

x3 = 2.336064
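The three hand-computed iterations can be reproduced with a few lines of Python. This is a small sketch that uses the same starting point and learning rate as above; the list name `history` is introduced here only for illustration.

```python
learning_rate = 0.01
x = 3.0          # initial value x0
history = []     # values of x after each update

for i in range(1, 4):
    gradient = 8 * x                      # derivative of 4x^2 at the current x
    x = x - learning_rate * gradient      # gradient descent update
    history.append(x)
    print(f"x{i} = {x:.6f}")

# Prints:
# x1 = 2.760000
# x2 = 2.539200
# x3 = 2.336064
```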

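From these three iterations we can see that the value of x decreases at every step and, if we keep running gradient descent, slowly converges to 0 (the local minimum). This raises a question: how many iterations of gradient descent should we run?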

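We can set a stopping threshold: when the difference between the previous and the current value of x becomes smaller than the threshold, we stop iterating. In machine learning and deep learning, gradient descent is used to minimize the cost function of the algorithm. Now that the inner workings of gradient descent are clear, let us turn to a Python implementation in which we minimize the cost function of linear regression and find the best-fit line. The components used in that implementation are described below.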
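Before moving on, the stopping threshold can be sketched on the parabola example. The function name `descend_until_converged`, the threshold of 1e-6, and the iteration cap are assumptions chosen for illustration.

```python
def descend_until_converged(start, learning_rate=0.01,
                            stopping_threshold=1e-6, max_iterations=10_000):
    """Minimize 4x^2, stopping once x changes by no more than the threshold."""
    x = start
    for i in range(1, max_iterations + 1):
        new_x = x - learning_rate * (8 * x)   # 8x is the gradient of 4x^2
        if abs(new_x - x) <= stopping_threshold:
            return new_x, i                   # converged after i iterations
        x = new_x
    return x, max_iterations                  # cap reached without converging


x_final, steps = descend_until_converged(start=3.0)
print(f"Stopped after {steps} iterations at x = {x_final}")
```

Note that the loop stops when the steps become small, so the returned x is close to 0 but not exactly 0; a smaller threshold gives a closer result at the cost of more iterations.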

Prediction Function

The prediction function of linear regression is a linear equation, given by y = wx + b.

prediction_function (y) = (w * x) + b

Here, x is the independent variable

y is the dependent variable

w is the weight associated with the input variable

b is the bias
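As a minimal sketch, the prediction function can be written so that it works on a whole NumPy array of inputs at once. The name `predict` and the sample values are made up for illustration.

```python
import numpy as np


def predict(x, w, b):
    """Return w * x + b for a scalar or a NumPy array x."""
    return (w * x) + b


x = np.array([1.0, 2.0, 3.0])      # made-up inputs
print(predict(x, w=2.0, b=0.5))    # [2.5 4.5 6.5]
```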

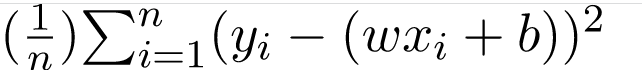

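Cost Function

The cost function computes the loss of the predictions that were made. For linear regression we use the mean squared error, which is the average of the squared differences between the actual and the predicted values.

Cost Function (J) = $\frac{1}{n} \sum_{i=1}^{n} \left(y_i - (w x_i + b)\right)^2$

Here, n is the number of samples.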
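A small worked example may help make the mean squared error concrete. The arrays below are made-up values, chosen so the result can be checked by hand.

```python
import numpy as np

y_true = np.array([3.0, 5.0, 7.0])        # made-up actual values
y_predicted = np.array([2.0, 5.0, 9.0])   # made-up predictions

# Errors are 1, 0, -2; their squares are 1, 0, 4; the mean is 5 / 3
cost = np.mean((y_true - y_predicted) ** 2)
print(cost)
```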

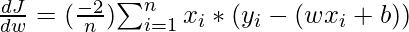

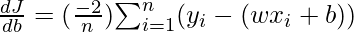

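Partial Derivatives (Gradients)

Differentiating the cost function with respect to the weight and the bias gives the partial derivatives:

$\frac{dJ}{dw} = -\frac{2}{n} \sum_{i=1}^{n} x_i \left(y_i - (w x_i + b)\right)$

$\frac{dJ}{db} = -\frac{2}{n} \sum_{i=1}^{n} \left(y_i - (w x_i + b)\right)$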
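One way to sanity-check the partial derivatives of the mean squared error is to compare them with finite differences. The sketch below uses the same gradient expressions as the implementation later in this article (-(2/n) times the sum of x(y - prediction) for the weight, and -(2/n) times the sum of (y - prediction) for the bias). The data, the starting values of w and b, and the helper names are made up for illustration.

```python
import numpy as np


def cost(w, b, x, y):
    """Mean squared error of the line w * x + b."""
    return np.mean((y - (w * x + b)) ** 2)


def gradients(w, b, x, y):
    """Analytical partial derivatives of the cost with respect to w and b."""
    n = len(x)
    residual = y - (w * x + b)
    dw = -(2 / n) * np.sum(x * residual)
    db = -(2 / n) * np.sum(residual)
    return dw, db


x = np.array([1.0, 2.0, 3.0, 4.0])   # made-up data
y = np.array([2.1, 3.9, 6.2, 7.8])
w, b, eps = 0.5, 0.1, 1e-6

dw, db = gradients(w, b, x, y)
# Central differences approximate the same derivatives numerically
dw_numeric = (cost(w + eps, b, x, y) - cost(w - eps, b, x, y)) / (2 * eps)
db_numeric = (cost(w, b + eps, x, y) - cost(w, b - eps, x, y)) / (2 * eps)

print(dw, dw_numeric)
print(db, db_numeric)
```

If the analytical and numerical values agree to several decimal places, the derivative formulas are very likely correct.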

Parameter Update

Update the weight and the bias by subtracting the product of the learning rate and the corresponding gradient.

w = w - (learning_rate * (dJ/dw))

b = b - (learning_rate * (dJ/db))

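Python Implementation of Gradient Descent

In the implementation we write two functions. The first is the cost function, which takes the actual and predicted outputs and returns the loss. The second is the gradient descent function itself, which takes the independent variable and the target variable and uses gradient descent to find the best-fit line. The number of iterations, the learning rate, and the stopping threshold are tuning parameters of the algorithm that the user can adjust: the number of iterations caps how many parameter updates are performed, and the stopping threshold is the minimum change in loss between two consecutive iterations below which the algorithm stops. In the main function we define linearly related sample data and apply gradient descent to it. The weight and bias found by gradient descent are then used in the main function to plot the best-fit line.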

# Importing Libraries

import numpy as np

import matplotlib.pyplot as plt

def mean_squared_error(y_true, y_predicted):

# Calculating the loss or cost

cost = np.sum((y_true-y_predicted)**2) / len(y_true)

return cost

# Gradient Descent Function

# Here iterations, learning_rate, stopping_threshold

# are hyperparameters that can be tuned

def gradient_descent(x, y, iterations = 1000, learning_rate = 0.0001,

stopping_threshold = 1e-6):

# Initializing weight, bias, learning rate and iterations

current_weight = 0.1

current_bias = 0.01

iterations = iterations

learning_rate = learning_rate

n = float(len(x))

costs = []

weights = []

previous_cost = None

# Estimation of optimal parameters

for i in range(iterations):

# Making predictions

y_predicted = (current_weight * x) + current_bias

# Calculationg the current cost

current_cost = mean_squared_error(y, y_predicted)

# If the change in cost is less than or equal to

# stopping_threshold we stop the gradient descent

if previous_cost and abs(previous_cost-current_cost)<=stopping_threshold:

break

previous_cost = current_cost

costs.append(current_cost)

weights.append(current_weight)

# Calculating the gradients

weight_derivative = -(2/n) * sum(x * (y-y_predicted))

bias_derivative = -(2/n) * sum(y-y_predicted)

# Updating weights and bias

current_weight = current_weight - (learning_rate * weight_derivative)

current_bias = current_bias - (learning_rate * bias_derivative)

# Printing the parameters for each 1000th iteration

print(f"Iteration {i+1}: Cost {current_cost}, Weight \

{current_weight}, Bias {current_bias}")

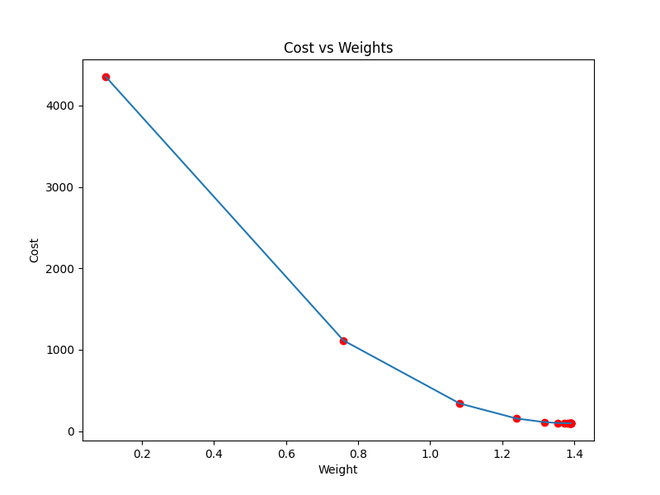

# Visualizing the weights and cost at for all iterations

plt.figure(figsize = (8,6))

plt.plot(weights, costs)

plt.scatter(weights, costs, marker='o', color='red')

plt.title("Cost vs Weights")

plt.ylabel("Cost")

plt.xlabel("Weight")

plt.show()

return current_weight, current_bias

def main():

# Data

X = np.array([32.50234527, 53.42680403, 61.53035803, 47.47563963, 59.81320787,

55.14218841, 52.21179669, 39.29956669, 48.10504169, 52.55001444,

45.41973014, 54.35163488, 44.1640495 , 58.16847072, 56.72720806,

48.95588857, 44.68719623, 60.29732685, 45.61864377, 38.81681754])

Y = np.array([31.70700585, 68.77759598, 62.5623823 , 71.54663223, 87.23092513,

78.21151827, 79.64197305, 59.17148932, 75.3312423 , 71.30087989,

55.16567715, 82.47884676, 62.00892325, 75.39287043, 81.43619216,

60.72360244, 82.89250373, 97.37989686, 48.84715332, 56.87721319])

# Estimating weight and bias using gradient descent

estimated_weight, eatimated_bias = gradient_descent(X, Y, iterations=2000)

print(f"Estimated Weight: {estimated_weight}\nEstimated Bias: {eatimated_bias}")

# Making predictions using estimated parameters

Y_pred = estimated_weight*X + eatimated_bias

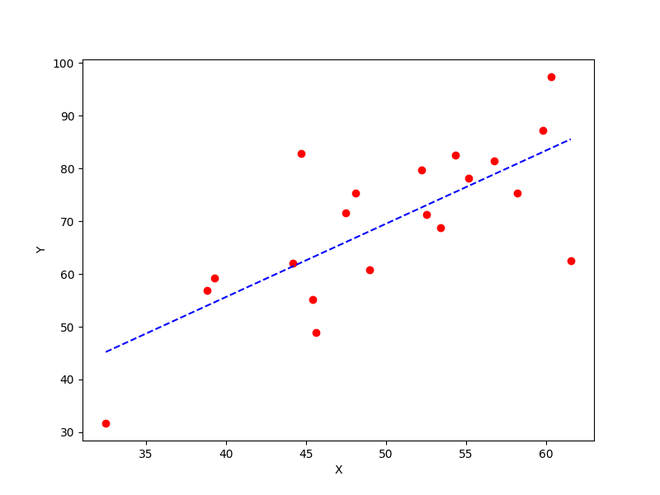

# Plotting the regression line

plt.figure(figsize = (8,6))

plt.scatter(X, Y, marker='o', color='red')

plt.plot([min(X), max(X)], [min(Y_pred), max(Y_pred)], color='blue',markerfacecolor='red',

markersize=10,linestyle='dashed')

plt.xlabel("X")

plt.ylabel("Y")

plt.show()

if __name__=="__main__":

main()

Output:

Iteration 1: Cost 4352.088931274409, Weight 0.7593291142562117, Bias 0.02288558130709

Iteration 2: Cost 1114.8561474350017, Weight 1.081602958862324, Bias 0.02918014748569513

Iteration 3: Cost 341.42912086804455, Weight 1.2391274084945083, Bias 0.03225308846928192

Iteration 4: Cost 156.64495290904443, Weight 1.3161239281746984, Bias 0.03375132986012604

Iteration 5: Cost 112.49704004742098, Weight 1.3537591652024805, Bias 0.034479873154934775

Iteration 6: Cost 101.9493925395456, Weight 1.3721549833978113, Bias 0.034832195392868505

Iteration 7: Cost 99.4293893333546, Weight 1.3811467575154601, Bias 0.03500062439068245

Iteration 8: Cost 98.82731958262897, Weight 1.3855419247507244, Bias 0.03507916814736111

Iteration 9: Cost 98.68347500997261, Weight 1.3876903144657764, Bias 0.035113776874486774

Iteration 10: Cost 98.64910780902792, Weight 1.3887405007983562, Bias 0.035126910596389935

Iteration 11: Cost 98.64089651459352, Weight 1.389253895811451, Bias 0.03512954755833985

Iteration 12: Cost 98.63893428729509, Weight 1.38950491235671, Bias 0.035127053821718185

Iteration 13: Cost 98.63846506273883, Weight 1.3896276808137857, Bias 0.035122052266051224

Iteration 14: Cost 98.63835254057648, Weight 1.38968776283053, Bias 0.03511582492978764

Iteration 15: Cost 98.63832524036214, Weight 1.3897172043139192, Bias 0.03510899846107016

Iteration 16: Cost 98.63831830104695, Weight 1.389731668997059, Bias 0.035101879159522745

Iteration 17: Cost 98.63831622628217, Weight 1.389738813163012, Bias 0.03509461674147458

Estimated Weight: 1.389738813163012

Estimated Bias: 0.03509461674147458

Image 5: the cost function approaching the local minimum

Image 6: the best-fit line obtained using gradient descent
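As an optional sanity check, the gradient descent result can be compared with a closed-form least-squares fit. The sketch below is an addition that is not part of the walkthrough above: it uses `np.polyfit` with degree 1 on the same data and compares the mean squared error of both lines. Because the run above stops as soon as the cost changes by less than the stopping threshold, its parameters need not coincide with the least-squares solution.

```python
import numpy as np

X = np.array([32.50234527, 53.42680403, 61.53035803, 47.47563963, 59.81320787,
              55.14218841, 52.21179669, 39.29956669, 48.10504169, 52.55001444,
              45.41973014, 54.35163488, 44.1640495, 58.16847072, 56.72720806,
              48.95588857, 44.68719623, 60.29732685, 45.61864377, 38.81681754])
Y = np.array([31.70700585, 68.77759598, 62.5623823, 71.54663223, 87.23092513,
              78.21151827, 79.64197305, 59.17148932, 75.3312423, 71.30087989,
              55.16567715, 82.47884676, 62.00892325, 75.39287043, 81.43619216,
              60.72360244, 82.89250373, 97.37989686, 48.84715332, 56.87721319])

# Closed-form least-squares line; polyfit returns the highest degree first
slope, intercept = np.polyfit(X, Y, deg=1)
closed_form_cost = np.mean((Y - (slope * X + intercept)) ** 2)

# Parameters reported in the output above
gd_weight, gd_bias = 1.389738813163012, 0.03509461674147458
gd_cost = np.mean((Y - (gd_weight * X + gd_bias)) ** 2)

print(f"Least squares:    weight {slope}, bias {intercept}, cost {closed_form_cost}")
print(f"Gradient descent: weight {gd_weight}, bias {gd_bias}, cost {gd_cost}")
```

The least-squares cost is a lower bound for any straight line on this data, so the gap between the two costs shows how far the gradient descent run is from the optimum.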

极客教程
