Implementing XOR in TensorFlow

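In this article, we will learn how to implement an XOR gate in TensorFlow. Before moving on to the TensorFlow implementation, let's look at the truth table of the XOR gate to understand how it behaves.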

| X | Y | X XOR Y |
|---|---|---|
| 0 | 0 | 0 |
| 0 | 1 | 1 |
| 1 | 0 | 1 |
| 1 | 1 | 0 |

The truth table shows that the gate outputs 1 only when exactly one of the inputs is 1. When both inputs are the same, the output is 0.
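As a quick check that is independent of TensorFlow, the truth table can be reproduced with Python's built-in bitwise XOR operator `^`. The helper name `xor_truth_table` is only illustrative and is not part of the tutorial's code.

```python
def xor_truth_table():
    """Return (x, y, x XOR y) rows for every pair of binary inputs."""
    return [(x, y, x ^ y) for x in (0, 1) for y in (0, 1)]


# Print one row per input combination, in the same order as the table.
for x, y, out in xor_truth_table():
    print(x, y, out)
```

The rows come out in the same order as the training data used later (`[0,0]`, `[0,1]`, `[1,0]`, `[1,1]`).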

Steps

We will now implement XOR using TensorFlow.

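Step 1: Import all the required libraries. Here we use TensorFlow and NumPy. The code relies on TensorFlow 1.x features (placeholders and sessions), so we import the `tensorflow.compat.v1` module and disable v2 behavior.

    import tensorflow.compat.v1 as tf
    tf.disable_v2_behavior()
    import numpy as np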

Step 2: Create placeholders for the input and output. The input has shape (4 × 2) and the output has shape (4 × 1).

    X = tf.placeholder(dtype=tf.float32, shape=(4, 2))
    Y = tf.placeholder(dtype=tf.float32, shape=(4, 1))

Step 3: Create the training inputs and outputs.

    INPUT_XOR = [[0, 0], [0, 1], [1, 0], [1, 1]]
    OUTPUT_XOR = [[0], [1], [1], [0]]

Step 4: Set a learning rate and the number of epochs the model should train for.

    learning_rate = 0.01
    epochs = 10000

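Step 5: Create a hidden layer for the model. The hidden layer has weights and biases. It multiplies the input by the weights, adds the biases to the product, and passes the result through the ReLU activation function to produce the input for the next layer.

    with tf.variable_scope('hidden'):
        h_w = tf.Variable(tf.truncated_normal([2, 2]), name='weights')
        # The bias shape [4, 2] matches the fixed batch of four samples,
        # so every training row gets its own bias values.
        h_b = tf.Variable(tf.truncated_normal([4, 2]), name='biases')
        h = tf.nn.relu(tf.matmul(X, h_w) + h_b)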
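To make the hidden-layer arithmetic concrete, here is a minimal pure-Python sketch of `relu(x · W + b)` for a single input row. The weight and bias values are made up for illustration (the tutorial initializes them randomly), a per-unit bias is assumed, and `dense_relu` is a hypothetical helper rather than a TensorFlow function.

```python
def dense_relu(x, weights, biases):
    """Compute relu(x . W + b) for one input row x.

    weights[i][j] connects input i to hidden unit j.
    """
    outputs = []
    for j in range(len(biases)):
        z = sum(x[i] * weights[i][j] for i in range(len(x))) + biases[j]
        outputs.append(max(0.0, z))  # ReLU clips negative values to zero
    return outputs


# Illustrative values only; these are not weights learned by the model.
W = [[1.0, 1.0],
     [1.0, 1.0]]
b = [0.0, -1.0]

print(dense_relu([1, 1], W, b))
print(dense_relu([0, 0], W, b))
```

For the row `[0, 0]` the second unit's pre-activation is negative, so ReLU sets it to zero; this clipping is what gives the layer its non-linearity.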

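Step 6: Create an output layer for the model. Like the hidden layer, the output layer has weights and biases and performs the same operation, but it uses the sigmoid activation function instead of ReLU so that the output lies between 0 and 1.

    with tf.variable_scope('output'):
        o_w = tf.Variable(tf.truncated_normal([2, 1]), name='weights')
        # As in the hidden layer, the bias shape [4, 1] matches the batch of four samples.
        o_b = tf.Variable(tf.truncated_normal([4, 1]), name='biases')
        Y_estimation = tf.nn.sigmoid(tf.matmul(h, o_w) + o_b)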
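It may help to see that a network of this shape (two ReLU hidden units followed by one sigmoid output) is able to represent XOR at all. The sketch below uses hand-picked weights, not values learned by the tutorial's model, and the function name `xor_net` is hypothetical.

```python
import math


def sigmoid(z):
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def xor_net(x, y):
    """Forward pass of a 2-2-1 network with hand-picked weights."""
    h1 = max(0.0, x + y)        # hidden unit 1: relu(x + y)
    h2 = max(0.0, x + y - 1.0)  # hidden unit 2: relu(x + y - 1)
    # Output weights (10, -20) and bias -5 are chosen by hand, not trained.
    return sigmoid(10.0 * h1 - 20.0 * h2 - 5.0)


for x, y in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, y, round(xor_net(x, y), 3))
```

The second hidden unit only activates when both inputs are 1, and its large negative output weight cancels the first unit's contribution in that case. Training is expected to find some weights with a similar effect, though not necessarily these.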

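Step 7: Create a loss/cost function. It measures how far the model's predictions are from the training targets. Here we take the mean of the squared differences between the predicted and actual outputs, which is the mean squared error (MSE). No square root is applied, so this is MSE rather than RMSE (root mean squared error).

    with tf.variable_scope('cost'):
        cost = tf.reduce_mean(tf.squared_difference(Y_estimation, Y))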
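The cost expression averages squared differences without taking a square root. The following pure-Python sketch computes that quantity (MSE) next to RMSE so the two can be compared; the prediction values are made up, and `mse` and `rmse` are illustrative helpers rather than TensorFlow functions.

```python
import math


def mse(predictions, targets):
    """Mean of the squared differences (what the cost above computes)."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def rmse(predictions, targets):
    """Square root of the MSE."""
    return math.sqrt(mse(predictions, targets))


preds = [0.1, 0.9, 0.8, 0.2]  # made-up model outputs
targets = [0, 1, 1, 0]        # XOR labels

print(mse(preds, targets), rmse(preds, targets))
```

Both quantities are minimized by the same predictions, so either could serve as a training cost; they differ only in scale.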

Step 8: Create the training operation, which uses the Adam optimizer with the given learning rate to minimize the cost.

    with tf.variable_scope('train'):
        train = tf.train.AdamOptimizer(learning_rate).minimize(cost)
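For intuition about what the optimizer does on each training step, here is a rough sketch of the textbook Adam update applied to a single parameter. It follows the commonly published update rule; TensorFlow's internal implementation differs in some details. The toy cost `(w - 3)^2` and the function `adam_minimize` are assumptions made for illustration.

```python
import math


def adam_minimize(grad_fn, w, learning_rate=0.01, steps=5000,
                  beta1=0.9, beta2=0.999, epsilon=1e-8):
    """Minimize a one-parameter function with the textbook Adam update."""
    m = 0.0  # running mean of gradients (first moment)
    v = 0.0  # running mean of squared gradients (second moment)
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= learning_rate * m_hat / (math.sqrt(v_hat) + epsilon)
    return w


# Hypothetical toy cost (w - 3)^2, whose gradient is 2 * (w - 3).
w_final = adam_minimize(lambda w: 2 * (w - 3), w=0.0)
print(w_final)
```

Dividing by the running root of squared gradients keeps the step size close to the learning rate regardless of the gradient's scale, which is one reason Adam is a common default choice.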

Step 9: Now that everything has been defined, we start a TensorFlow session and begin training by initializing all the variables declared above.

    with tf.Session() as session:
        session.run(tf.global_variables_initializer())
        print("Training Started")

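Step 10: Train the model and print its predictions. Because this is supervised learning, we feed both the inputs and the expected outputs during training. We print the cost every 1000 epochs (one tenth of the total), and after training we predict the outputs for the four inputs so they can be compared with the expected values. This code continues inside the `with tf.Session()` block opened in Step 9.

        # Log ten times over the whole run, i.e. every 1000 epochs
        log_count_frac = epochs // 10

        for epoch in range(epochs):
            # Training the base network
            session.run(train, feed_dict={X: INPUT_XOR, Y: OUTPUT_XOR})

            # Print cost for every 1000 epochs
            if epoch % log_count_frac == 0:
                cost_results = session.run(cost, feed_dict={X: INPUT_XOR, Y: OUTPUT_XOR})
                print("Cost of Training at epoch {0} is {1}".format(epoch, cost_results))

        print("Training Completed !")

        # Predict on the training inputs and round to one decimal place
        Y_test = session.run(Y_estimation, feed_dict={X: INPUT_XOR})
        print(np.round(Y_test, decimals=1))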
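The sigmoid outputs are real numbers between 0 and 1, so one simple way to compare them with the expected XOR labels is to threshold them at 0.5. The sketch below assumes that threshold and uses made-up outputs in place of `Y_test`, since real values vary from run to run; `to_binary` is a hypothetical helper.

```python
def to_binary(predictions, threshold=0.5):
    """Map each sigmoid output to 1 if it reaches the threshold, else 0."""
    return [[1 if p >= threshold else 0 for p in row] for row in predictions]


# Made-up sigmoid outputs standing in for Y_test.
example_outputs = [[0.02], [0.97], [0.98], [0.03]]
OUTPUT_XOR = [[0], [1], [1], [0]]

predicted = to_binary(example_outputs)
print(predicted == OUTPUT_XOR)
```

If the comparison is false after training, rerunning with a different random initialization or more epochs is a reasonable first thing to try.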

Below is the complete implementation:

    # Import the TensorFlow library.
    # Since we use TensorFlow 1.x functionality,
    # import the v1 compatibility module.
    import tensorflow.compat.v1 as tf
    tf.disable_v2_behavior()
    import numpy as np

    # Create placeholders for input X and output Y
    X = tf.placeholder(dtype=tf.float32, shape=(4, 2))
    Y = tf.placeholder(dtype=tf.float32, shape=(4, 1))

    # Give training input and label
    INPUT_XOR = [[0, 0], [0, 1], [1, 0], [1, 1]]
    OUTPUT_XOR = [[0], [1], [1], [0]]

    # Give a learning rate and the number
    # of epochs the model has to train for.
    learning_rate = 0.01
    epochs = 10000

    # Create/Initialize a Hidden Layer variable
    with tf.variable_scope('hidden'):
        # Initialize weights and biases for the hidden layer
        # randomly (truncated normal, mean=0 and std_dev=1).
        # The bias shape [4, 2] matches the fixed batch of four samples.
        h_w = tf.Variable(tf.truncated_normal([2, 2]), name='weights')
        h_b = tf.Variable(tf.truncated_normal([4, 2]), name='biases')

        # Multiply the input by the weights, add the bias,
        # and pass the result to the ReLU activation function
        h = tf.nn.relu(tf.matmul(X, h_w) + h_b)

    # Create/Initialize an Output Layer variable
    with tf.variable_scope('output'):
        # Initialize weights and biases for the output layer
        # randomly (truncated normal, mean=0 and std_dev=1)
        o_w = tf.Variable(tf.truncated_normal([2, 1]), name='weights')
        o_b = tf.Variable(tf.truncated_normal([4, 1]), name='biases')

        # Multiply the hidden layer output by the weights, add
        # the bias, and pass the result to a sigmoid activation
        Y_estimation = tf.nn.sigmoid(tf.matmul(h, o_w) + o_b)

    # Create/Initialize Loss function variable
    with tf.variable_scope('cost'):
        # Calculate the cost as the mean squared error (MSE)
        # between the estimated Y value and the actual Y value
        cost = tf.reduce_mean(tf.squared_difference(Y_estimation, Y))

    # Create/Initialize Training model variable
    with tf.variable_scope('train'):
        # Train the model with the Adam optimizer, using the
        # learning rate and the cost defined above
        train = tf.train.AdamOptimizer(learning_rate).minimize(cost)

    # Start a TensorFlow Session
    with tf.Session() as session:
        # Initialize the session variables
        session.run(tf.global_variables_initializer())
        print("Training Started")

        # Log ten times over the whole run, i.e. every 1000 epochs
        log_count_frac = epochs // 10

        for epoch in range(epochs):
            # Training the base network
            session.run(train, feed_dict={X: INPUT_XOR, Y: OUTPUT_XOR})

            # Print cost for every 1000 epochs
            if epoch % log_count_frac == 0:
                cost_results = session.run(cost, feed_dict={X: INPUT_XOR, Y: OUTPUT_XOR})
                print("Cost of Training at epoch {0} is {1}".format(epoch, cost_results))

        print("Training Completed !")

        # Predict on the training inputs and round to one decimal place
        Y_test = session.run(Y_estimation, feed_dict={X: INPUT_XOR})
        print(np.round(Y_test, decimals=1))

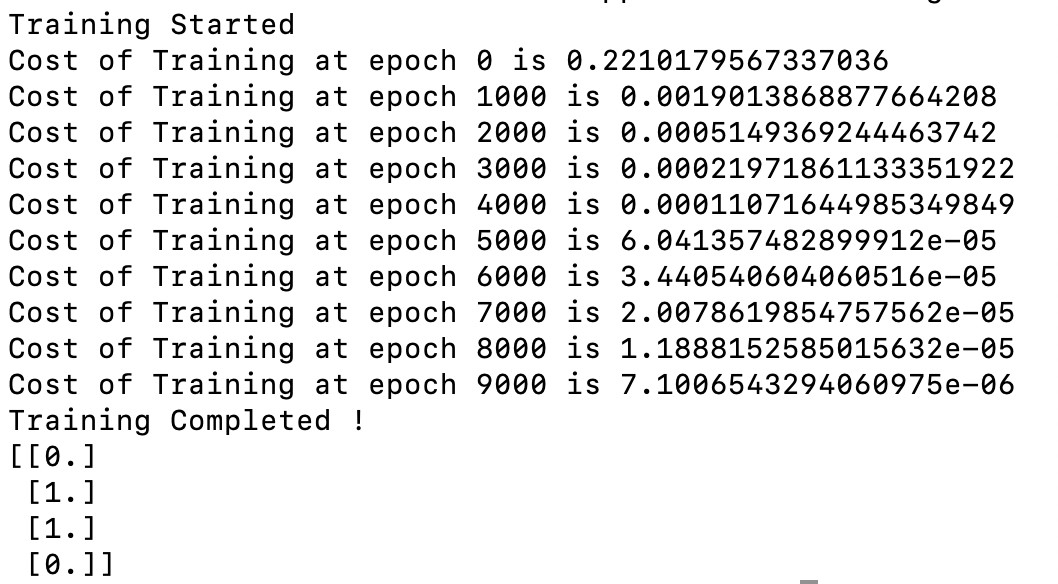

输出:

上述程序的输出

极客教程
