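Image Segmentation Using TensorFlow

Image segmentation is the task of assigning a class label to every pixel, so that pixels of the same class form regions. The input is an image; the output is a mask that outlines the region of each class in that image. Image segmentation is widely used in medical image analysis, self-driving cars, satellite image analysis, and similar fields. There are several kinds of image segmentation, such as semantic segmentation and instance segmentation. In all of them, the main goal is to identify and understand the content of an image at the pixel level.

For this task we will use The Oxford-IIIT Pet Dataset, which is free to use. It covers 37 pet categories with roughly 200 images per category. The images vary widely in scale, pose, and lighting. Every image comes with ground-truth annotations: the breed, a head ROI (region of interest), and a pixel-level trimap segmentation. Each pixel is assigned to one of three classes: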

1. Pixels belonging to the pet (foreground)

2. Background pixels

3. Unclassified pixels along the boundary of the pet
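To make the trimap concrete, here is a minimal NumPy sketch. The 4x4 array is made up for illustration and is not taken from the dataset; it only shows how the 1-based labels can be shifted to 0-based labels and counted per class.

```python
import numpy as np

# Hypothetical 4x4 trimap that uses the 1-based labels 1, 2, 3.
trimap = np.array([
    [2, 2, 2, 2],
    [2, 3, 3, 2],
    [2, 3, 1, 3],
    [2, 2, 3, 3],
], dtype=np.uint8)

# Shift to 0-based labels so that they line up with the
# channel indices of a 3-class model output.
labels = trimap - 1

# Count how many pixels fall into each of the three classes.
counts = np.bincount(labels.ravel(), minlength=3)
print(counts)  # [1 9 6]
```

The same shift by one appears later in the preprocessing code, where the mask is reduced by 1 before training.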

Imports

Let's start by importing all the dependencies and packages the program needs.

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt
import os
import seaborn as sns
from tensorflow import keras
import tensorflow as tf
import tensorflow_datasets as tfds
import cv2
import PIL
from IPython.display import clear_output

Downloading the Dataset

The dataset is readily available in TensorFlow Datasets. Simply call tfds.load() to download it.

dataset, info = tfds.load('oxford_iiit_pet:3.*.*', with_info=True)

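Data Processing

First we normalize the pixel values to the range [0, 1] by dividing each value by 255. We also subtract 1 from the mask so that its labels run from 0 to 2 instead of 1 to 3. The dataset from TensorFlow Datasets is already split into training and test sets.

def normalize(input_image, input_mask):
    # Scale the pixel values from [0, 255] to [0, 1]
    img = tf.cast(input_image, dtype=tf.float32) / 255.0
    # Shift the mask labels from {1, 2, 3} to {0, 1, 2}
    input_mask -= 1
    return img, input_mask

@tf.function
def load_train_ds(dataset):
    img = tf.image.resize(dataset['image'],
                          size=(width, height))
    # Nearest-neighbor resizing keeps the mask values valid integer labels
    mask = tf.image.resize(dataset['segmentation_mask'],
                           size=(width, height), method='nearest')
    # Randomly flip the image and its mask together
    if tf.random.uniform(()) > 0.5:
        img = tf.image.flip_left_right(img)
        mask = tf.image.flip_left_right(mask)
    img, mask = normalize(img, mask)
    return img, mask

@tf.function
def load_test_ds(dataset):
    img = tf.image.resize(dataset['image'],
                          size=(width, height))
    # Nearest-neighbor resizing keeps the mask values valid integer labels
    mask = tf.image.resize(dataset['segmentation_mask'],
                           size=(width, height), method='nearest')
    img, mask = normalize(img, mask)
    return img, mask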
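One point to keep in mind when resizing masks: tf.image.resize uses bilinear interpolation by default, and any method that averages neighbouring values can produce labels that do not exist at that location. The NumPy sketch below illustrates the effect on a made-up one-dimensional slice of a mask; it is an illustration of the idea, not of how TensorFlow resizes internally.

```python
import numpy as np

# Hypothetical 1-D slice of a mask: label 0 sits next to label 2.
row = np.array([0, 0, 2, 2], dtype=np.float32)

# Averaging neighbours (the core of linear interpolation) creates the
# value 1 at the boundary, which is a different class altogether.
averaged = (row[:-1] + row[1:]) / 2

# Nearest-neighbour upsampling only repeats labels that already exist.
nearest = np.repeat(row, 2)

print(averaged)  # [0. 1. 2.]
print(nearest)   # [0. 0. 0. 0. 2. 2. 2. 2.]
```

For label masks, nearest-neighbour resizing is therefore the safer choice, while bilinear resizing remains fine for the images themselves.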

Next we set a few constants, such as the buffer size and the input height and width.

TRAIN_LENGTH = info.splits['train'].num_examples
# Batch size is the number of examples used in one training step.
# It is usually a power of 2
BATCH_SIZE = 64
BUFFER_SIZE = 1000
STEPS_PER_EPOCH = TRAIN_LENGTH // BATCH_SIZE
# For VGG16 this is the input size
width, height = 224, 224
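As a quick check of the arithmetic, the sketch below works out the number of steps per epoch. The split size of 3,680 is an assumption based on what TFDS reports for the training split of oxford_iiit_pet; in the tutorial the value comes from info.splits['train'].num_examples.

```python
# Assumed size of the training split (normally read from `info`).
train_length = 3680
batch_size = 64

# Integer division: only full batches count as steps.
steps_per_epoch = train_length // batch_size

# Examples that do not fit into a full batch within one epoch.
leftover = train_length - steps_per_epoch * batch_size

print(steps_per_epoch, leftover)  # 57 32
```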

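Now let's load the training and test data into separate variables and build the input pipelines. The random horizontal flip in load_train_ds acts as data augmentation and is applied to each image before batching.

train = dataset['train'].map(
    load_train_ds, num_parallel_calls=tf.data.AUTOTUNE)
test = dataset['test'].map(load_test_ds)

train_ds = train.cache().shuffle(BUFFER_SIZE).batch(BATCH_SIZE).repeat()
train_ds = train_ds.prefetch(buffer_size=tf.data.AUTOTUNE)
test_ds = test.batch(BATCH_SIZE)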
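To build some intuition for shuffle(BUFFER_SIZE) followed by batch(BATCH_SIZE), here is a simplified pure-Python model of a buffered shuffle and of batching. The helper names buffered_shuffle and batched are hypothetical, and this is a sketch of the idea rather than how tf.data is implemented.

```python
import random

def buffered_shuffle(items, buffer_size, seed=0):
    """Yield items in random order while holding at most buffer_size + 1 of them."""
    rng = random.Random(seed)
    buffer = []
    for item in items:
        buffer.append(item)
        if len(buffer) > buffer_size:
            # The buffer is full: emit one randomly chosen element.
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]
            yield buffer.pop()
    # The input is exhausted: emit the remaining elements in random order.
    rng.shuffle(buffer)
    yield from buffer

def batched(items, batch_size):
    """Group consecutive items into lists of batch_size (the last may be shorter)."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch

batches = list(batched(buffered_shuffle(range(10), buffer_size=4), batch_size=4))
print(batches)
```

A small buffer only mixes nearby elements, which is why the buffer size is usually chosen large relative to the batch size.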

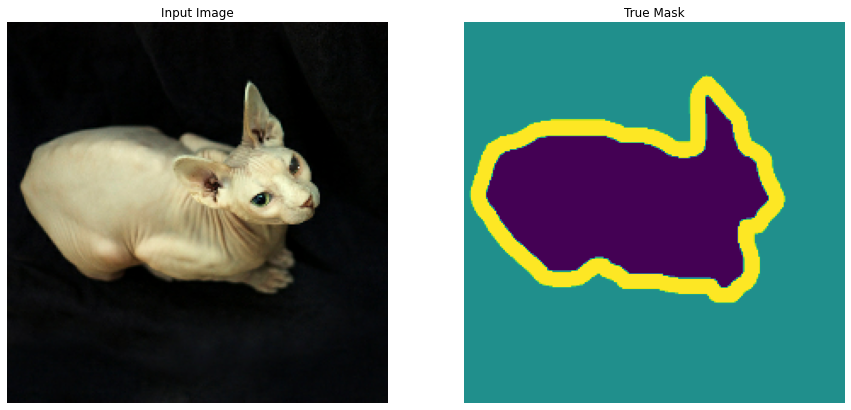

Visualizing the Data

Display a sample image from the dataset together with its mask.

def display_images(display_list):
    plt.figure(figsize=(15, 15))
    title = ['Input Image', 'True Mask',
             'Predicted Mask']
    for i in range(len(display_list)):
        plt.subplot(1, len(display_list), i+1)
        plt.title(title[i])
        plt.imshow(keras.preprocessing.image.array_to_img(display_list[i]))
        plt.axis('off')
    plt.show()

for img, mask in train.take(1):
    sample_image, sample_mask = img, mask
    display_list = sample_image, sample_mask
    display_images(display_list)

Output:

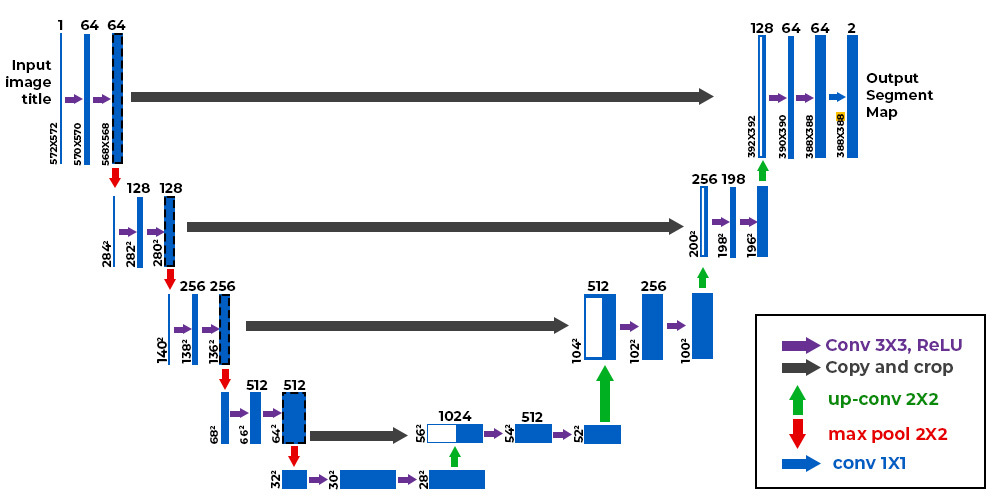

U-Net

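U-Net is a CNN architecture used for many segmentation tasks. It consists of a contracting path and an expanding path, which together form the U shape that gives the network its name. The contracting path repeats convolution layers followed by ReLU and then a max pooling layer. Along the contracting path, features are extracted and the spatial resolution is reduced. Along the expanding path, a series of up-convolutions restores the resolution, and the result of each is concatenated with the high-resolution features from the matching stage of the contracting path.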
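The shape bookkeeping of the two paths can be sketched in NumPy. This is an illustration under simplifying assumptions: nearest-neighbour upsampling stands in for the learned up-convolution, there are no convolution weights, and the helper names max_pool_2x2 and upsample_2x are made up for this example.

```python
import numpy as np

def max_pool_2x2(x):
    """2x2 max pooling with stride 2 on an (H, W, C) array."""
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

def upsample_2x(x):
    """Nearest-neighbour upsampling by a factor of 2 on an (H, W, C) array."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

features = np.arange(16, dtype=np.float32).reshape(4, 4, 1)

# Contracting path: the spatial size is halved.
pooled = max_pool_2x2(features)        # shape (2, 2, 1)

# Expanding path: the spatial size is restored.
upsampled = upsample_2x(pooled)        # shape (4, 4, 1)

# Skip connection: concatenate with the high-resolution features.
merged = np.concatenate([upsampled, features], axis=-1)  # shape (4, 4, 2)

print(pooled[..., 0])
print(merged.shape)
```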

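In this project we use the encoder of the VGG16 model, because it has already been trained on the ImageNet dataset and has learned useful features. An encoder trained from scratch, as in the original U-Net, would have to learn everything from the beginning and would take longer to train.

base_model = keras.applications.vgg16.VGG16(
    include_top=False, input_shape=(width, height, 3))

# Outputs of the five pooling layers, from highest to lowest resolution
layer_names = [
    'block1_pool',
    'block2_pool',
    'block3_pool',
    'block4_pool',
    'block5_pool',
]
base_model_outputs = [base_model.get_layer(
    name).output for name in layer_names]

# Freeze the pretrained encoder weights
base_model.trainable = False

VGG_16 = tf.keras.models.Model(base_model.input,
                               base_model_outputs)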
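Each of the five VGG16 blocks ends in a 2x2 max pooling layer with stride 2, so the spatial size is halved five times. A short sketch of the sizes for a 224x224 input (plain arithmetic, no TensorFlow needed):

```python
input_size = 224

sizes = {}
size = input_size
for block in range(1, 6):
    size //= 2  # each block ends in a 2x2 max pool with stride 2
    sizes[f'block{block}_pool'] = size

print(sizes)
# {'block1_pool': 112, 'block2_pool': 56, 'block3_pool': 28,
#  'block4_pool': 14, 'block5_pool': 7}
```

These sizes matter for the decoder, which has to bring the 7x7 output back up to 224x224.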

Now define the decoder. As the function name indicates, it follows the FCN-8 pattern: the encoder output is upsampled and added to 1x1-convolved features from block4_pool and block3_pool, then upsampled to the input size.

def fcn8_decoder(convs, n_classes):
    f1, f2, f3, f4, p5 = convs

    n = 4096
    c6 = tf.keras.layers.Conv2D(
        n, (7, 7), activation='relu', padding='same',
        name="conv6")(p5)
    c7 = tf.keras.layers.Conv2D(
        n, (1, 1), activation='relu', padding='same',
        name="conv7")(c6)
    f5 = c7

    # upsample the output of the encoder
    # then crop extra pixels that were introduced
    o = tf.keras.layers.Conv2DTranspose(n_classes, kernel_size=(
        4, 4), strides=(2, 2), use_bias=False)(f5)
    o = tf.keras.layers.Cropping2D(cropping=(1, 1))(o)

    # take the pool 4 features and apply a 1x1 convolution
    # so that they have the same shape as `o` above
    o2 = f4
    o2 = (tf.keras.layers.Conv2D(n_classes, (1, 1),
                                 activation='relu',
                                 padding='same'))(o2)

    # add the results of the upsampling and pool 4 prediction
    o = tf.keras.layers.Add()([o, o2])

    # upsample the resulting tensor of the operation you just did
    o = (tf.keras.layers.Conv2DTranspose(
        n_classes, kernel_size=(4, 4), strides=(2, 2),
        use_bias=False))(o)
    o = tf.keras.layers.Cropping2D(cropping=(1, 1))(o)

    # take the pool 3 features and apply a 1x1 convolution
    # so that they have the same shape as `o` above
    o2 = f3
    o2 = (tf.keras.layers.Conv2D(n_classes, (1, 1),
                                 activation='relu',
                                 padding='same'))(o2)

    # add the results of the upsampling and pool 3 prediction
    o = tf.keras.layers.Add()([o, o2])

    # upsample up to the size of the original image
    o = tf.keras.layers.Conv2DTranspose(
        n_classes, kernel_size=(8, 8), strides=(8, 8),
        use_bias=False)(o)

    # append a softmax to get the class probabilities
    o = tf.keras.layers.Activation('softmax')(o)
    return o
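The kernel sizes, strides, and crops in the decoder are chosen so that each upsampled tensor matches the skip features it is added to. The sketch below traces the spatial sizes for a 224x224 input. The helper names are hypothetical, and the formula assumes the Keras default 'valid' padding for Conv2DTranspose with no output padding.

```python
def conv_transpose_out(size, kernel, stride):
    """Output size of a transposed convolution with 'valid' padding."""
    return size * stride + max(kernel - stride, 0)

def crop(size, amount):
    """Output size after cropping `amount` pixels from both sides."""
    return size - 2 * amount

size = 7  # spatial size of block5_pool for a 224x224 input

# First upsampling: 7 -> 16, cropped to 14 (matches block4_pool).
after_first = crop(conv_transpose_out(size, kernel=4, stride=2), 1)

# Second upsampling: 14 -> 30, cropped to 28 (matches block3_pool).
after_second = crop(conv_transpose_out(after_first, kernel=4, stride=2), 1)

# Final upsampling by a factor of 8: 28 -> 224 (the input size).
final = conv_transpose_out(after_second, kernel=8, stride=8)

print(after_first, after_second, final)  # 14 28 224
```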

Combine everything into the final model and compile it.

def segmentation_model():
    inputs = keras.layers.Input(shape=(width, height, 3))
    convs = VGG_16(inputs)
    outputs = fcn8_decoder(convs, 3)
    model = tf.keras.Model(inputs=inputs, outputs=outputs)
    return model

opt = keras.optimizers.Adam()
model = segmentation_model()
# The decoder already ends in a softmax, so the model outputs
# probabilities rather than logits: from_logits must be False
model.compile(optimizer=opt,
              loss=tf.keras.losses.SparseCategoricalCrossentropy(
                  from_logits=False),
              metrics=['accuracy'])
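Because the decoder ends in a softmax layer, the loss receives probabilities rather than raw logits. The NumPy sketch below shows what happens when probabilities are treated as logits and passed through softmax a second time: the distribution is flattened, which weakens the training signal. The example values are made up.

```python
import numpy as np

def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()

logits = np.array([4.0, 1.0, 0.0])

# What a softmax output layer produces: a confident prediction.
probs = softmax(logits)

# What a loss effectively works with if it treats those
# probabilities as logits and applies softmax again.
twice = softmax(probs)

print(np.round(probs, 3))
print(np.round(twice, 3))
```

The predicted class stays the same, but the confidence is much lower after the second softmax, so the loss setting and the output activation should agree.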

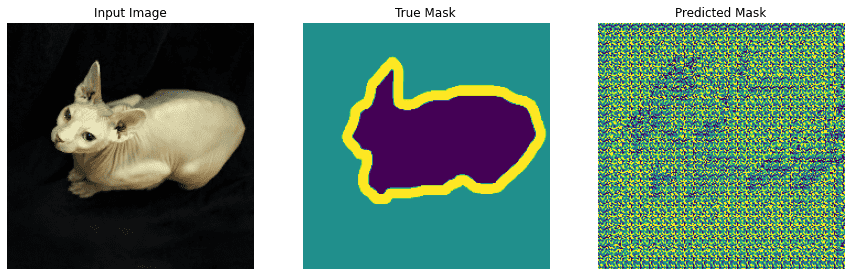

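Creating a Prediction Mask Utility

We now write a helper that shows the input image, its true mask, and the predicted mask side by side in one row. It is purely a visualization utility.

def create_mask(pred_mask):
    # Pick the most likely class for every pixel
    pred_mask = tf.argmax(pred_mask, axis=-1)
    # Restore the channel dimension
    pred_mask = pred_mask[..., tf.newaxis]
    # Return the mask of the first image in the batch
    return pred_mask[0]

def show_predictions(dataset=None, num=1):
    if dataset is not None:
        for image, mask in dataset.take(num):
            pred_mask = model.predict(image)
            display_images([image[0], mask[0], create_mask(pred_mask)])
    else:
        display_images([sample_image, sample_mask,
                        create_mask(model.predict(sample_image[tf.newaxis, ...]))])

show_predictions()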
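The mask creation step is easier to follow on a tiny example. The sketch below reproduces the same three operations in NumPy on a made-up prediction for a batch of one 2x2 image with three classes.

```python
import numpy as np

# Hypothetical model output: batch of 1, 2x2 pixels, 3 class probabilities.
pred = np.array([[
    [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
    [[0.2, 0.3, 0.5], [0.6, 0.3, 0.1]],
]])

mask = np.argmax(pred, axis=-1)   # most likely class per pixel, shape (1, 2, 2)
mask = mask[..., np.newaxis]      # restore the channel axis, shape (1, 2, 2, 1)
first = mask[0]                   # first image of the batch, shape (2, 2, 1)

print(first[..., 0])
# [[0 1]
#  [2 0]]
```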


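Training

With all the required functions and the model in place, we can now train the model. We train for 20 epochs. VAL_SUBSPLITS = 5 divides the number of validation steps by 5, so each epoch validates on roughly one fifth of the test set.

EPOCHS = 20
VAL_SUBSPLITS = 5
VALIDATION_STEPS = info.splits['test'].num_examples//BATCH_SIZE//VAL_SUBSPLITS

model_history = model.fit(train_ds, epochs=EPOCHS,
                          steps_per_epoch=STEPS_PER_EPOCH,
                          validation_steps=VALIDATION_STEPS,
                          validation_data=test_ds)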
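To see what the validation settings mean in practice, the sketch below works through the numbers. The test split size of 3,669 is an assumption based on what TFDS reports for oxford_iiit_pet; in the tutorial it is read from info.splits['test'].num_examples.

```python
# Assumed size of the test split (normally read from `info`).
test_length = 3669
batch_size = 64
val_subsplits = 5

# Number of validation batches evaluated after each epoch.
validation_steps = test_length // batch_size // val_subsplits

# Number of test images those batches contain.
images_per_validation = validation_steps * batch_size

# Share of the test set that each validation pass covers.
fraction = images_per_validation / test_length

print(validation_steps, images_per_validation, round(fraction, 3))  # 11 704 0.192
```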

输出:

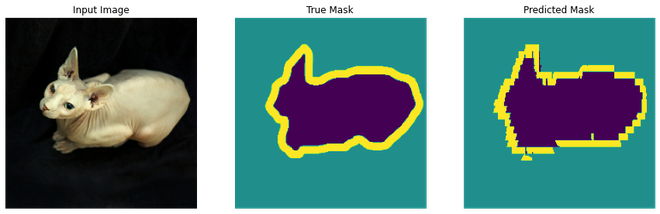

20个历时后的预测

Metrics

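For segmentation tasks we consider two metrics: Intersection over Union (IoU) and the Dice score.

IoU is the area of overlap between the predicted segmentation and the true mask, divided by the area of their union. The Dice score is twice the area of overlap divided by the sum of the two areas.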
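Written as formulas, for a single class, the two metrics look as follows. The symbols are notation introduced here for illustration: A is the set of pixels predicted as that class, B is the set of pixels labelled with that class in the true mask, and ε is a small smoothing constant that avoids division by zero when a class is absent.

```latex
\mathrm{IoU} = \frac{|A \cap B| + \epsilon}{|A| + |B| - |A \cap B| + \epsilon},
\qquad
\mathrm{Dice} = 2 \cdot \frac{|A \cap B| + \epsilon}{|A| + |B| + \epsilon}
```

Both metrics range from 0 (no overlap) to 1 (perfect overlap), and the Dice score is never lower than IoU for the same prediction.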

def compute_metrics(y_true, y_pred):
    '''
    Computes IOU and Dice Score.

    Args:
      y_true (tensor) - ground truth label map
      y_pred (tensor) - predicted label map
    '''
    class_wise_iou = []
    class_wise_dice_score = []

    smoothening_factor = 0.00001

    for i in range(3):
        intersection = np.sum((y_pred == i) * (y_true == i))
        y_true_area = np.sum((y_true == i))
        y_pred_area = np.sum((y_pred == i))
        combined_area = y_true_area + y_pred_area

        iou = (intersection + smoothening_factor) / \
            (combined_area - intersection + smoothening_factor)
        class_wise_iou.append(iou)

        dice_score = 2 * ((intersection + smoothening_factor) /
                          (combined_area + smoothening_factor))
        class_wise_dice_score.append(dice_score)

    return class_wise_iou, class_wise_dice_score
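A tiny worked example helps to check the metrics by hand. The sketch below uses made-up 2x2 label maps with two classes and leaves out the smoothing factor for readability; the helper name iou_and_dice is hypothetical.

```python
import numpy as np

# Hypothetical ground truth and prediction for a 2x2 image.
y_true = np.array([[0, 0],
                   [1, 1]])
y_pred = np.array([[0, 1],
                   [1, 1]])

def iou_and_dice(y_true, y_pred, label):
    """IoU and Dice score of one class, without smoothing."""
    intersection = np.sum((y_true == label) & (y_pred == label))
    true_area = np.sum(y_true == label)
    pred_area = np.sum(y_pred == label)
    union = true_area + pred_area - intersection
    iou = intersection / union
    dice = 2 * intersection / (true_area + pred_area)
    return iou, dice

iou0, dice0 = iou_and_dice(y_true, y_pred, 0)
iou1, dice1 = iou_and_dice(y_true, y_pred, 1)

print(round(iou0, 4), round(dice0, 4))  # 0.5 0.6667
print(round(iou1, 4), round(dice1, 4))  # 0.6667 0.8
```

For class 0, one pixel overlaps, the true area is 2, and the predicted area is 1, which gives a union of 2 and therefore an IoU of 0.5.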

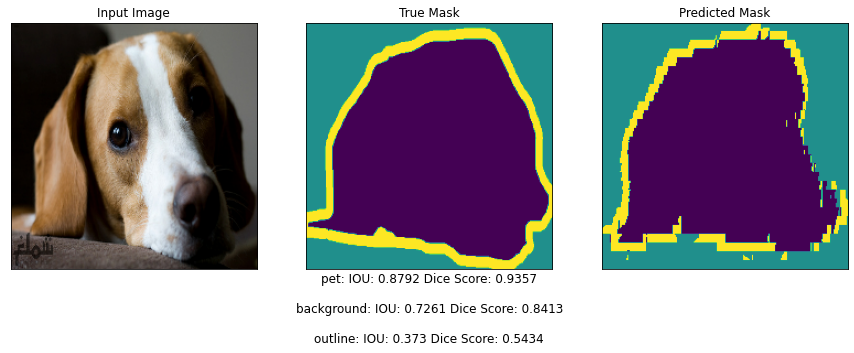

Model Predictions with Metrics

def get_test_image_and_annotation_arrays():
    '''
    Unpacks the test dataset and returns
    the input images and segmentation masks
    '''
    num_examples = info.splits['test'].num_examples

    # Collect the whole test set in a single batch
    ds = test_ds.unbatch()
    ds = ds.batch(num_examples)

    images = []
    y_true_segments = []

    for image, annotation in ds.take(1):
        y_true_segments = annotation.numpy()
        images = image.numpy()

    # Keep only as many examples as fill complete batches
    usable = num_examples - (num_examples % BATCH_SIZE)
    y_true_segments = y_true_segments[:usable]
    images = images[:usable]

    return images, y_true_segments

y_true_images, y_true_segments = get_test_image_and_annotation_arrays()
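The slicing at the end of the function drops the examples that would not fill a complete batch. A minimal sketch with small, made-up numbers shows the effect:

```python
import numpy as np

# Hypothetical small numbers: 10 examples, batches of 4.
num_examples, batch_size = 10, 4
data = np.arange(num_examples)

# Largest multiple of batch_size that fits into num_examples.
usable = num_examples - num_examples % batch_size

# The last 2 examples are dropped.
trimmed = data[:usable]

print(usable, trimmed)  # 8 [0 1 2 3 4 5 6 7]
```

Any index used to pick a test image afterwards has to be smaller than the trimmed length.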

# Index of the test image to inspect
integer_slider = 2574
img = np.reshape(y_true_images[integer_slider], (1, width, height, 3))
y_pred_mask = model.predict(img)
y_pred_mask = create_mask(y_pred_mask)
y_pred_mask.shape

def display_prediction(display_list, display_string):
    plt.figure(figsize=(15, 15))
    title = ['Input Image', 'True Mask', 'Predicted Mask']

    for i in range(len(display_list)):
        plt.subplot(1, len(display_list), i+1)
        plt.title(title[i])
        plt.xticks([])
        plt.yticks([])
        if i == 1:
            plt.xlabel(display_string, fontsize=12)
        plt.imshow(keras.preprocessing.image.array_to_img(display_list[i]))
    plt.show()

# Names for labels 0, 1, 2. The original code uses class_names without
# defining it; these names are an assumption based on the trimap order
# (foreground, background, unclassified border) after subtracting 1
class_names = ['pet', 'background', 'outline']

iou, dice_score = compute_metrics(
    y_true_segments[integer_slider], y_pred_mask.numpy())
display_list = [y_true_images[integer_slider],
                y_true_segments[integer_slider], y_pred_mask]

display_string_list = ["{}: IOU: {} Dice Score: {}".format(class_names[idx],
                                                           i, dc) for idx, (i, dc) in
                       enumerate(zip(np.round(iou, 4), np.round(dice_score, 4)))]
display_string = "\n\n".join(display_string_list)

# showing predictions with metrics
display_prediction(display_list, display_string)


With that, we have performed image segmentation on the Oxford-IIIT Pet Dataset using TensorFlow.

极客教程
