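This post is a simple walkthrough of how to train a CNN: we derive backpropagation for CNNs and implement it from scratch in Python.

In this post, we're going to do a deep dive on something most introductions to Convolutional Neural Networks (CNNs) lack: how to train a CNN, including deriving gradients, implementing backprop from scratch (using only numpy), and ultimately building a full training pipeline!

This post assumes a basic knowledge of CNNs. My introduction to CNNs, An Introduction to Convolutional Neural Networks (CNNs), covers everything you need to know, so I'd highly recommend reading that first. If you've already read it, welcome back!

Parts of this post also assume a basic knowledge of multivariable calculus. You can skip those sections if you want, but I recommend reading them even if you don't understand everything. We'll incrementally write code as we derive results, and even a surface-level understanding can be helpful.

Buckle up! Time to get into it.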

舞台设置

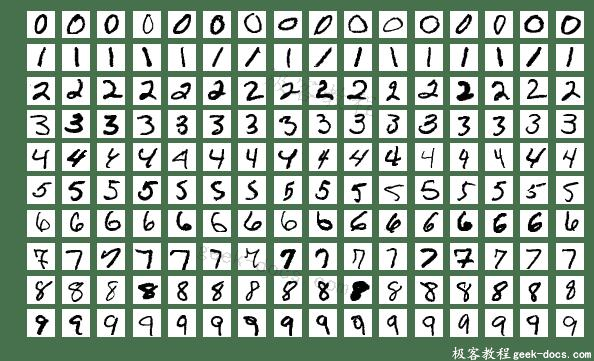

我们使用CNN解决MNIST手写数字分类问题:

来自MNIST数据集的样本图像

我们的(简单的)CNN由Conv层、Max池化层和Softmax层组成。这是我们CNN的图表:

我们的CNN采用28×28灰度MNIST图像,输出10个概率,每个数字1个。

我们已经编写了3个类,每个层一个类:Conv3x3、MaxPool和Softmax。每个类都实现了一个forward()方法,我们用它来构建CNN的forward pass.

cnn.py:

    conv = Conv3x3(8)                   # 28x28x1 -> 26x26x8
    pool = MaxPool2()                   # 26x26x8 -> 13x13x8
    softmax = Softmax(13 * 13 * 8, 10)  # 13x13x8 -> 10

    def forward(image, label):
        '''
        Completes a forward pass of the CNN and calculates the accuracy and
        cross-entropy loss.
        - image is a 2d numpy array
        - label is a digit
        '''
        # We transform the image from [0, 255] to [-0.5, 0.5] to make it easier
        # to work with. This is standard practice.
        out = conv.forward((image / 255) - 0.5)
        out = pool.forward(out)
        out = softmax.forward(out)

        # Calculate cross-entropy loss and accuracy. np.log() is the natural log.
        loss = -np.log(out[label])
        acc = 1 if np.argmax(out) == label else 0

        return out, loss, acc

You can view the code or run the CNN in your browser. It's also available on GitHub.

Here's what the output of our CNN looks like right now:

    MNIST CNN initialized!
    [Step 100] Past 100 steps: Average Loss 2.302 | Accuracy: 11%
    [Step 200] Past 100 steps: Average Loss 2.302 | Accuracy: 8%
    [Step 300] Past 100 steps: Average Loss 2.302 | Accuracy: 3%
    [Step 400] Past 100 steps: Average Loss 2.302 | Accuracy: 12%

Obviously, we'd like to do better than 10% accuracy... let's teach this CNN a lesson.
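The steady loss of about 2.30 is no accident: it is what cross-entropy gives when the network spreads its probability evenly across the 10 digits. Here's a quick check, assuming perfectly uniform predictions (an idealization; a randomly initialized network is only approximately uniform):

```python
import numpy as np

# Assumption: an untrained network assigns equal probability to all 10 digits.
uniform_probs = np.full(10, 0.1)

# Cross-entropy loss is -ln(p_c); with uniform outputs it is the same
# for every possible correct label (3 is an arbitrary choice here).
baseline_loss = -np.log(uniform_probs[3])
print(f"{baseline_loss:.2f}")
```

Any loss meaningfully below this baseline means the network has learned something beyond guessing.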

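Training Overview

Training a neural network typically consists of two phases:

- A forward phase, where the input is passed completely through the network.

- A backward phase, where gradients are backpropagated (backprop) and weights are updated.

We'll follow this pattern to train our CNN. There are also two major implementation-specific ideas we'll use:

- During the forward phase, each layer will cache any data (like inputs, intermediate values, etc.) it will need for the backward phase. This means that any backward phase must be preceded by a corresponding forward phase.

- During the backward phase, each layer will receive a gradient and also return a gradient. It will receive the gradient of the loss with respect to its outputs (\frac{\partial L}{\partial out}) and return the gradient of the loss with respect to its inputs (\frac{\partial L}{\partial in}).

These two ideas will help keep our training implementation clean and organized. The best way to see why is probably by looking at code. Training our CNN will ultimately look something like this: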

    # Feed forward
    out = conv.forward((image / 255) - 0.5)
    out = pool.forward(out)
    out = softmax.forward(out)

    # Calculate initial gradient
    gradient = np.zeros(10)
    # ...

    # Backprop
    gradient = softmax.backprop(gradient)
    gradient = pool.backprop(gradient)
    gradient = conv.backprop(gradient)

See how nice and clean that looks? Now imagine building a network with 50 layers instead of 3; having a good system in place becomes even more valuable.
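To make the cache-then-backprop contract concrete, here's a minimal sketch using a toy layer. The `Scale` class is hypothetical and not part of our CNN; it just multiplies its input by one learned number, which keeps the two ideas easy to see:

```python
import numpy as np

class Scale:
    """Hypothetical toy layer (not part of the CNN): output = input * factor."""

    def __init__(self, factor):
        self.factor = factor

    def forward(self, input):
        # Forward phase: cache what the backward phase will need.
        self.last_input = input
        return input * self.factor

    def backprop(self, d_L_d_out, learn_rate):
        # dL/dfactor = sum over outputs of dL/dout * dout/dfactor (= input)
        d_L_d_factor = np.sum(d_L_d_out * self.last_input)
        # dL/dinput = dL/dout * dout/dinput (= factor, before the update)
        d_L_d_input = d_L_d_out * self.factor
        # SGD update of the single parameter
        self.factor -= learn_rate * d_L_d_factor
        # Backward phase: receive a gradient, return a gradient.
        return d_L_d_input

layer = Scale(2.0)
out = layer.forward(np.array([1.0, -3.0]))
grad_in = layer.backprop(np.array([0.5, 0.5]), learn_rate=0.1)
print(out, grad_in, layer.factor)
```

Calling backprop() before forward() would fail here because last_input would not exist yet, which is exactly why every backward phase needs a preceding forward phase.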

Backprop: Softmax

我们将从结尾开始,然后一步步开始,因为这就是backprop的工作原理。首先,回顾交叉熵损失: L=−ln(p_c)

其中p_c是正确的c类的预测概率(换句话说,我们当前图像的实际数字是多少)。

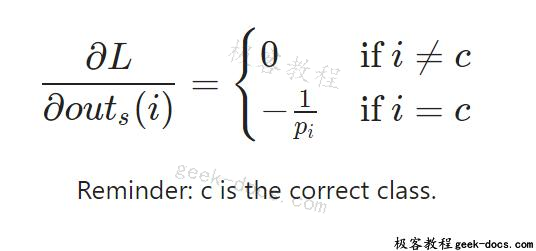

我们需要计算的第一件事是Softmax层反向阶段的输入,\frac{\partial L}{\partial out_s},其中out_s是Softmax层的输出:一个10个概率的向量.这很简单,因为只有p_i出现在损失方程中:

这是你看到上面提到的我们的初始梯度:

    # Calculate initial gradient
    gradient = np.zeros(10)
    gradient[label] = -1 / out[label]
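As a sanity check on that initial gradient, here's a small sketch with made-up numbers. The probability vector and label below are hypothetical; the check compares -1 / p_c against a finite-difference estimate of how the loss changes when p_c is nudged:

```python
import numpy as np

# Hypothetical softmax output for one image whose correct label is 2.
out = np.array([0.05, 0.05, 0.40, 0.05, 0.05, 0.10, 0.10, 0.05, 0.10, 0.05])
label = 2

loss = -np.log(out[label])

# Initial gradient: zero everywhere except at the correct class.
gradient = np.zeros(10)
gradient[label] = -1 / out[label]

# Finite-difference estimate of dL/dp_c: nudge p_c and measure the change.
eps = 1e-6
numeric = (-np.log(out[label] + eps) - loss) / eps

print(gradient[label], numeric)
```

The two printed values should agree to several decimal places, and every other entry of gradient stays zero because no other probability appears in the loss.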

We're almost ready to implement our first backward phase. We just need to first perform the forward phase caching we discussed earlier:

softmax.py

    class Softmax:
        # ...

        def forward(self, input):
            '''
            Performs a forward pass of the softmax layer using the given input.
            Returns a 1d numpy array containing the respective probability values.
            - input can be any array with any dimensions.
            '''
            self.last_input_shape = input.shape

            input = input.flatten()
            self.last_input = input

            input_len, nodes = self.weights.shape

            totals = np.dot(input, self.weights) + self.biases
            self.last_totals = totals

            exp = np.exp(totals)
            return exp / np.sum(exp, axis=0)

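We cache 3 things here that will be useful for implementing the backward phase:

- The input's shape before we flatten it.

- The input after we flatten it.

- The totals, which are the values passed in to the softmax activation.

With that out of the way, we can start deriving the gradients for the backward phase. We've already derived the input to the Softmax backward phase: \frac{\partial L}{\partial out_s}. One fact we can use about \frac{\partial L}{\partial out_s} is that it's only nonzero for c, the correct class.

That means we can ignore everything but out_s(c).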

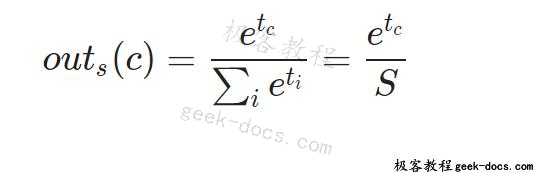

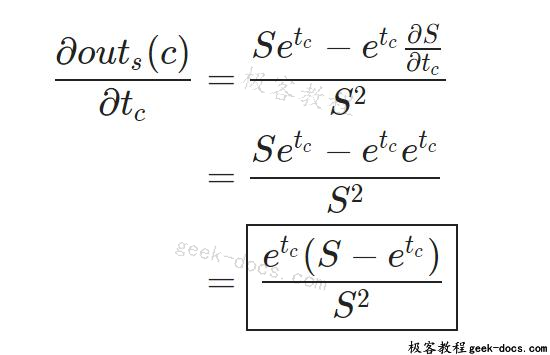

首先,让我们计算out_s(c)相对于总数的梯度(传递到softmax激活的值)。让t_i作为i类的总数。我们因此可以将out_s(c)写成:

这里S= \sum_{i}{e^{t_i}}。

现在让我们考虑一些类k,例如k\not=c,我们可以写out_s(c)为下面这样:

out_s(c) = e^{t{_c}} S^{-1}

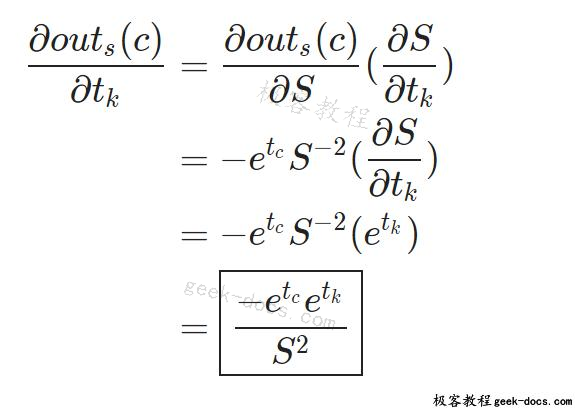

用链式法则来推导:

请注意,这里我们是假设k\not=c。

现在我们对c求导,这次用除法法则(因为out_s(c)的分子上有个e^{t_c}):

唷。这是整篇文章中最难的微积分部分——从这里开始只会变得更容易!让我们开始实现这个:

softmax.py

    class Softmax:
        # ...

        def backprop(self, d_L_d_out):
            '''
            Performs a backward pass of the softmax layer.
            Returns the loss gradient for this layer's inputs.
            - d_L_d_out is the loss gradient for this layer's outputs.
            '''
            # We know only 1 element of d_L_d_out will be nonzero
            for i, gradient in enumerate(d_L_d_out):
                if gradient == 0:
                    continue

                # e^totals
                t_exp = np.exp(self.last_totals)

                # Sum of all e^totals
                S = np.sum(t_exp)

                # Gradients of out[i] against totals
                d_out_d_t = -t_exp[i] * t_exp / (S ** 2)
                d_out_d_t[i] = t_exp[i] * (S - t_exp[i]) / (S ** 2)

                # ... to be continued

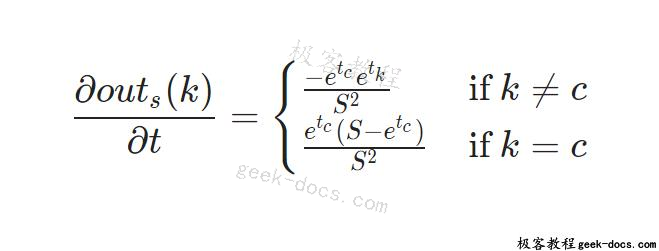

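Remember how \frac{\partial L}{\partial out_s} is only nonzero for the correct class, c? We start by looking for c: we search d_L_d_out for a nonzero gradient. Once we find it, we calculate the gradient \frac{\partial out_s(i)}{\partial t} (d_out_d_t) using the results we derived above:

\frac{\partial out_s(c)}{\partial t_k} = \begin{cases} \frac{-e^{t_c} e^{t_k}}{S^2} & \text{if } k \neq c \\ \frac{e^{t_c} (S - e^{t_c})}{S^2} & \text{if } k = c \end{cases}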

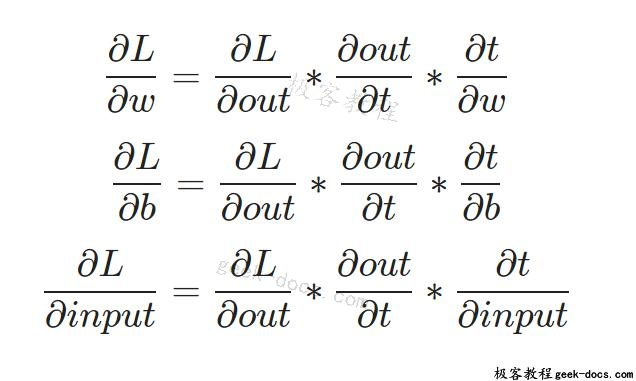
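If you'd like to convince yourself that the two lines computing d_out_d_t are right, a numerical check is a reasonable way to do it. The sketch below uses hypothetical totals for a 4-class problem and a local softmax() helper (neither is from our CNN), and compares the analytic gradient against central differences:

```python
import numpy as np

def softmax(t):
    # Plain softmax, matching the forward pass of the Softmax layer.
    e = np.exp(t)
    return e / np.sum(e)

# Hypothetical totals; c is the class whose output we differentiate.
t = np.array([1.0, 2.0, 0.5, -1.0])
c = 1

t_exp = np.exp(t)
S = np.sum(t_exp)

# Analytic gradient of out_s(c) with respect to every total.
d_out_d_t = -t_exp[c] * t_exp / (S ** 2)
d_out_d_t[c] = t_exp[c] * (S - t_exp[c]) / (S ** 2)

# Numerical gradient: nudge one total at a time in both directions.
eps = 1e-6
numeric = np.zeros_like(t)
for k in range(len(t)):
    bump = np.zeros_like(t)
    bump[k] = eps
    numeric[k] = (softmax(t + bump)[c] - softmax(t - bump)[c]) / (2 * eps)

print(np.allclose(d_out_d_t, numeric, atol=1e-8))
```

Note that the entries of d_out_d_t sum to zero: raising every total by the same amount leaves the softmax output unchanged.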

让我们继续。我们最终希望权重损失梯度、偏差和输入:

- 我们将使用权重梯度\frac{\partial L}{\partial w}来更新图层的权重。

- 我们将使用偏差梯度\frac{\partial L}{\partial b}来更新我们图层的偏差。

- 我们将从backprop()方法返回输入梯度\frac{\partial L}{\partial input},以便下一层可以使用它。这就是我们在培训概述部分中讨论的返回梯度!

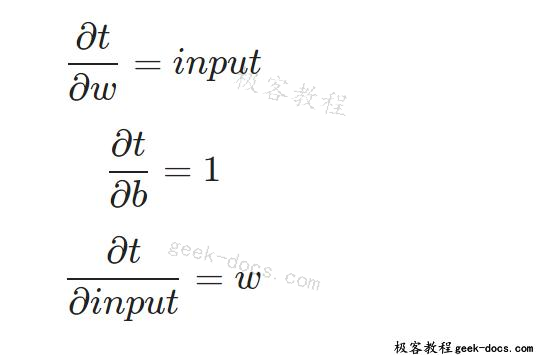

要计算这3个损失梯度,我们首先需要得到另外3个结果:总计相对于权重、偏差和输入的梯度。相关方程为:

这些梯度很简单。

把所有相关的放在一起:

把它写进代码里就不那么简单了:

softmax.py

    class Softmax:
        # ...

        def backprop(self, d_L_d_out):
            '''
            Performs a backward pass of the softmax layer.
            Returns the loss gradient for this layer's inputs.
            - d_L_d_out is the loss gradient for this layer's outputs.
            '''
            # We know only 1 element of d_L_d_out will be nonzero
            for i, gradient in enumerate(d_L_d_out):
                if gradient == 0:
                    continue

                # e^totals
                t_exp = np.exp(self.last_totals)

                # Sum of all e^totals
                S = np.sum(t_exp)

                # Gradients of out[i] against totals
                d_out_d_t = -t_exp[i] * t_exp / (S ** 2)
                d_out_d_t[i] = t_exp[i] * (S - t_exp[i]) / (S ** 2)

                # Gradients of totals against weights/biases/input
                d_t_d_w = self.last_input
                d_t_d_b = 1
                d_t_d_inputs = self.weights

                # Gradients of loss against totals
                d_L_d_t = gradient * d_out_d_t

                # Gradients of loss against weights/biases/input
                d_L_d_w = d_t_d_w[np.newaxis].T @ d_L_d_t[np.newaxis]
                d_L_d_b = d_L_d_t * d_t_d_b
                d_L_d_inputs = d_t_d_inputs @ d_L_d_t

                # ... to be continued

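First, we pre-calculate d_L_d_t since we'll use it several times. Then, we calculate each gradient:

- d_L_d_w: We need 2d arrays to do matrix multiplication (@), but d_t_d_w and d_L_d_t are 1d arrays. np.newaxis lets us easily create a new axis of length one, so we end up multiplying matrices with dimensions (input_len, 1) and (1, nodes). Thus, the final result for d_L_d_w has shape (input_len, nodes), which is the same as self.weights!

- d_L_d_b: This one is straightforward, since d_t_d_b is 1.

- d_L_d_inputs: We multiply matrices with dimensions (input_len, nodes) and (nodes, 1) to get a result with length input_len.

Try working through small examples of the calculations above, especially the matrix multiplications for d_L_d_w and d_L_d_inputs. That's the best way to understand why this code correctly computes the gradients.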
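Here's one such small example, assuming a hypothetical layer with input_len = 3 and nodes = 2. The numbers are made up so the products are easy to verify by hand:

```python
import numpy as np

# Hypothetical tiny layer: input_len = 3, nodes = 2.
last_input = np.array([1.0, 2.0, 3.0])   # plays the role of d_t_d_w, shape (3,)
weights = np.array([[0.1, 0.2],
                    [0.3, 0.4],
                    [0.5, 0.6]])         # plays the role of d_t_d_inputs, shape (3, 2)
d_L_d_t = np.array([10.0, -1.0])         # shape (2,)

# (3, 1) @ (1, 2) -> (3, 2): an outer product with the same shape as weights.
d_L_d_w = last_input[np.newaxis].T @ d_L_d_t[np.newaxis]

# (3, 2) @ (2,) -> (3,): one gradient value per input element.
d_L_d_inputs = weights @ d_L_d_t

print(d_L_d_w)
print(d_L_d_inputs)
```

Each entry d_L_d_w[r, n] is just last_input[r] * d_L_d_t[n], and each entry of d_L_d_inputs is a weighted sum of d_L_d_t over the nodes that the input feeds into.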

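With all the gradients computed, all that's left is to actually train the Softmax layer! We'll update the weights and biases using Stochastic Gradient Descent (SGD), just like we did in my introduction to neural networks, and then return d_L_d_inputs: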

softmax.py

    class Softmax:
        # ...

        def backprop(self, d_L_d_out, learn_rate):
            '''
            Performs a backward pass of the softmax layer.
            Returns the loss gradient for this layer's inputs.
            - d_L_d_out is the loss gradient for this layer's outputs.
            - learn_rate is a float
            '''
            # We know only 1 element of d_L_d_out will be nonzero
            for i, gradient in enumerate(d_L_d_out):
                if gradient == 0:
                    continue

                # e^totals
                t_exp = np.exp(self.last_totals)

                # Sum of all e^totals
                S = np.sum(t_exp)

                # Gradients of out[i] against totals
                d_out_d_t = -t_exp[i] * t_exp / (S ** 2)
                d_out_d_t[i] = t_exp[i] * (S - t_exp[i]) / (S ** 2)

                # Gradients of totals against weights/biases/input
                d_t_d_w = self.last_input
                d_t_d_b = 1
                d_t_d_inputs = self.weights

                # Gradients of loss against totals
                d_L_d_t = gradient * d_out_d_t

                # Gradients of loss against weights/biases/input
                d_L_d_w = d_t_d_w[np.newaxis].T @ d_L_d_t[np.newaxis]
                d_L_d_b = d_L_d_t * d_t_d_b
                d_L_d_inputs = d_t_d_inputs @ d_L_d_t

                # Update weights / biases
                self.weights -= learn_rate * d_L_d_w
                self.biases -= learn_rate * d_L_d_b

                return d_L_d_inputs.reshape(self.last_input_shape)

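Notice that we added a learn_rate parameter that controls how fast we update our weights. Also, we have to reshape() before returning d_L_d_inputs because we flattened the input during our forward pass: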

softmax.py

    class Softmax:
        # ...

        def forward(self, input):
            '''
            Performs a forward pass of the softmax layer using the given input.
            Returns a 1d numpy array containing the respective probability values.
            - input can be any array with any dimensions.
            '''
            self.last_input_shape = input.shape

            input = input.flatten()
            self.last_input = input

            # ...

Reshaping to last_input_shape ensures that this layer returns gradients for its input in the same format that the input was originally given to it.
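One way to gain confidence in the whole Softmax derivation is a numerical gradient check. The sketch below is standalone and uses a hypothetical 5-input, 3-class layer rather than the Softmax class itself; it follows the same steps as backprop() and compares d_L_d_w against finite differences of the loss:

```python
import numpy as np

rng = np.random.default_rng(0)
input_len, nodes, label = 5, 3, 1   # hypothetical sizes and correct class

x = rng.normal(size=input_len)
weights = rng.normal(size=(input_len, nodes)) / input_len
biases = np.zeros(nodes)

def loss_fn(w):
    # Forward pass plus cross-entropy loss for the given weights.
    exp = np.exp(x @ w + biases)
    return -np.log(exp[label] / np.sum(exp))

# Analytic gradient, following the same steps as backprop().
t_exp = np.exp(x @ weights + biases)
S = np.sum(t_exp)
out = t_exp / S
gradient = -1 / out[label]
d_out_d_t = -t_exp[label] * t_exp / (S ** 2)
d_out_d_t[label] = t_exp[label] * (S - t_exp[label]) / (S ** 2)
d_L_d_t = gradient * d_out_d_t
d_L_d_w = x[np.newaxis].T @ d_L_d_t[np.newaxis]

# Numerical gradient, one weight at a time (central differences).
eps = 1e-6
numeric = np.zeros_like(weights)
for r in range(input_len):
    for col in range(nodes):
        bump = np.zeros_like(weights)
        bump[r, col] = eps
        numeric[r, col] = (loss_fn(weights + bump) - loss_fn(weights - bump)) / (2 * eps)

one_hot = np.eye(nodes)[label]
print(np.allclose(d_L_d_w, numeric, atol=1e-6))
print(np.allclose(d_L_d_t, out - one_hot))
```

The second check shows a well-known simplification: for softmax followed by cross-entropy, d_L_d_t works out to the predicted probabilities minus a one-hot vector for the correct class.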

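Test Drive: Softmax Backprop

We've finished our first backprop implementation! Let's quickly test it to see if it's any good. We'll start implementing a train() method in the cnn.py file from An Introduction to Convolutional Neural Networks (CNNs):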

cnn.py

    # Imports and setup here
    # ...

    def forward(image, label):
        # Implementation excluded
        # ...

    def train(im, label, lr=.005):
        '''
        Completes a full training step on the given image and label.
        Returns the cross-entropy loss and accuracy.
        - im is a 2d numpy array
        - label is a digit
        - lr is the learning rate
        '''
        # Forward
        out, loss, acc = forward(im, label)

        # Calculate initial gradient
        gradient = np.zeros(10)
        gradient[label] = -1 / out[label]

        # Backprop
        gradient = softmax.backprop(gradient, lr)
        # TODO: backprop MaxPool2 layer
        # TODO: backprop Conv3x3 layer

        return loss, acc

    print('MNIST CNN initialized!')

    # Train!
    loss = 0
    num_correct = 0
    for i, (im, label) in enumerate(zip(train_images, train_labels)):
        if i % 100 == 99:
            print(
                '[Step %d] Past 100 steps: Average Loss %.3f | Accuracy: %d%%' %
                (i + 1, loss / 100, num_correct)
            )
            loss = 0
            num_correct = 0

        l, acc = train(im, label)
        loss += l
        num_correct += acc

Running this gives results similar to:

    MNIST CNN initialized!
    [Step 100] Past 100 steps: Average Loss 2.239 | Accuracy: 18%
    [Step 200] Past 100 steps: Average Loss 2.140 | Accuracy: 32%
    [Step 300] Past 100 steps: Average Loss 1.998 | Accuracy: 48%
    [Step 400] Past 100 steps: Average Loss 1.861 | Accuracy: 59%
    [Step 500] Past 100 steps: Average Loss 1.789 | Accuracy: 56%
    [Step 600] Past 100 steps: Average Loss 1.809 | Accuracy: 48%
    [Step 700] Past 100 steps: Average Loss 1.718 | Accuracy: 63%
    [Step 800] Past 100 steps: Average Loss 1.588 | Accuracy: 69%
    [Step 900] Past 100 steps: Average Loss 1.509 | Accuracy: 71%
    [Step 1000] Past 100 steps: Average Loss 1.481 | Accuracy: 70%

The loss is going down and the accuracy is going up: our CNN is already learning!

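Backprop: Max Pooling

A Max Pooling layer can't be trained because it doesn't actually have any weights, but we still need to implement a backprop() method for it to calculate gradients. We'll start by adding forward phase caching again. All we need to cache this time is the input: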

maxpool.py

    class MaxPool2:
        # ...

        def forward(self, input):
            '''
            Performs a forward pass of the maxpool layer using the given input.
            Returns a 3d numpy array with dimensions (h / 2, w / 2, num_filters).
            - input is a 3d numpy array with dimensions (h, w, num_filters)
            '''
            self.last_input = input

            # More implementation
            # ...

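During the forward pass, the Max Pooling layer takes an input volume and halves its width and height dimensions by picking the max values over 2×2 blocks. The backward pass does the opposite: we'll double the width and height of the loss gradient by assigning each gradient value to where the original max value was in its corresponding 2×2 block.

Here's an example. Consider this forward phase for a Max Pooling layer:

An example forward phase that transforms a 4×4 input to a 2×2 output

The backward phase for that same layer would look like this:

An example backward phase that transforms a 2×2 gradient to a 4×4 gradient

Each gradient value is assigned to where the original max value was, and every other value is zero.

Why does the backward phase for a Max Pooling layer work like this? Think about what \frac{\partial L}{\partial inputs} intuitively should be. An input pixel that isn't the max value in its 2×2 block would have zero marginal effect on the loss, because changing that value slightly wouldn't change the output at all! In other words, \frac{\partial L}{\partial input} = 0 for non-max pixels. On the other hand, an input pixel that is the max value would have its value passed through to the output, so \frac{\partial output}{\partial input} = 1, meaning \frac{\partial L}{\partial input} = \frac{\partial L}{\partial output}.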
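The routing described above can be sketched on a single 4×4 channel. The input values and upstream gradient below are hypothetical, and this standalone version uses np.argmax for brevity rather than the layer's own code:

```python
import numpy as np

# Hypothetical 4x4 single-filter input and a 2x2 upstream gradient.
inp = np.array([[0., 50., 0., 29.],
                [0., 80., 31., 2.],
                [33., 90., 0., 75.],
                [0., 9., 0., 95.]])
d_L_d_out = np.array([[1., 2.],
                      [3., 4.]])

pooled = np.zeros((2, 2))
d_L_d_input = np.zeros_like(inp)
for i in range(2):
    for j in range(2):
        block = inp[i * 2:i * 2 + 2, j * 2:j * 2 + 2]
        # Forward phase: keep the max of each 2x2 block.
        pooled[i, j] = block.max()
        # Backward phase: route the gradient to where that max sat.
        # Note: np.argmax picks only the first max when values tie.
        r, c = np.unravel_index(np.argmax(block), block.shape)
        d_L_d_input[i * 2 + r, j * 2 + c] = d_L_d_out[i, j]

print(pooled)
print(d_L_d_input)
```

The four gradient values land at positions (1, 1), (1, 2), (2, 1), and (3, 3), which are exactly where 80, 31, 90, and 95 sat in the input; every other entry stays zero.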

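We can implement this pretty quickly using the iterate_regions() helper method we wrote in An Introduction to Convolutional Neural Networks (CNNs). I'll include it again as a reminder: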

maxpool.py

    class MaxPool2:
        # ...

        def iterate_regions(self, image):
            '''
            Generates non-overlapping 2x2 image regions to pool over.
            - image is a 3d numpy array with dimensions (h, w, num_filters)
            '''
            h, w, _ = image.shape
            new_h = h // 2
            new_w = w // 2

            for i in range(new_h):
                for j in range(new_w):
                    im_region = image[(i * 2):(i * 2 + 2), (j * 2):(j * 2 + 2)]
                    yield im_region, i, j

        def backprop(self, d_L_d_out):
            '''
            Performs a backward pass of the maxpool layer.
            Returns the loss gradient for this layer's inputs.
            - d_L_d_out is the loss gradient for this layer's outputs.
            '''
            d_L_d_input = np.zeros(self.last_input.shape)

            for im_region, i, j in self.iterate_regions(self.last_input):
                h, w, f = im_region.shape
                amax = np.amax(im_region, axis=(0, 1))

                for i2 in range(h):
                    for j2 in range(w):
                        for f2 in range(f):
                            # If this pixel was the max value, copy the gradient to it.
                            if im_region[i2, j2, f2] == amax[f2]:
                                d_L_d_input[i * 2 + i2, j * 2 + j2, f2] = d_L_d_out[i, j, f2]

            return d_L_d_input

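For every pixel in every 2×2 image region in every filter, we copy the gradient from d_L_d_out to d_L_d_input if it was the max value during the forward pass.

That's it! On to our final layer.

Backprop: Conv

We're finally here: backpropagating through a Conv layer is the core of training a CNN. The forward phase caching is simple: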

conv.py

    class Conv3x3:
        # ...

        def forward(self, input):
            '''
            Performs a forward pass of the conv layer using the given input.
            Returns a 3d numpy array with dimensions (h, w, num_filters).
            - input is a 2d numpy array
            '''
            self.last_input = input

            # More implementation
            # ...

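A reminder about our implementation: for simplicity, we assume the input to our conv layer is a 2d array. This only works for us because we use it as the first layer in our network. If we were building a bigger network that needed to use Conv3x3 multiple times, we'd have to make the input a 3d array.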

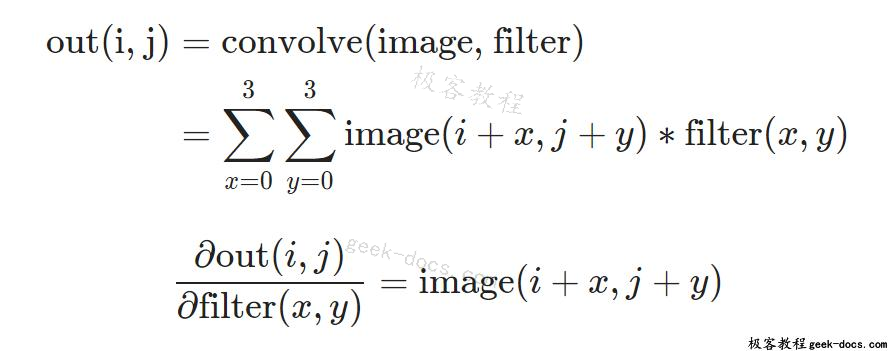

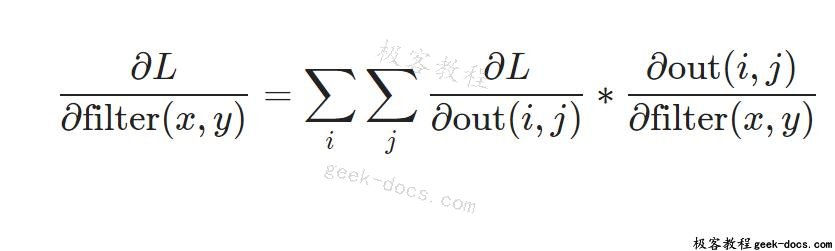

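We're primarily interested in the loss gradient for the filters in our conv layer, since we need that to update our filter weights. We already have \frac{\partial L}{\partial out} for the conv layer, so we just need \frac{\partial out}{\partial filters}. To calculate that, we ask ourselves this: how would changing a filter's weight affect the conv layer's output?

The reality is that changing any filter weight would affect the entire output image for that filter, since every output pixel uses every filter weight during convolution. To make this easier to think about, let's consider just one output pixel at a time: how would modifying a filter change the output of one specific output pixel?

Here's a super simple example to help think about this question:

A 3×3 image (left) convolved with a 3×3 filter (middle) to produce a 1×1 output (right)

We have a 3×3 image convolved with a 3×3 filter to produce a 1×1 output. What if we increased the center filter weight by 1? The output would increase by the center image value, 80:

Similarly, increasing any other filter weight by 1 would increase the output by the value of the corresponding image pixel! This suggests that the derivative of a specific output pixel with respect to a specific filter weight is just the corresponding image pixel value. Doing the math confirms this:

out(i, j) = convolve(image, filter) = \sum_{x=0}^{2} \sum_{y=0}^{2} image(i + x, j + y) * filter(x, y)

\frac{\partial out(i, j)}{\partial filter(x, y)} = image(i + x, j + y)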
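To see the perturbation argument in numbers, here's a minimal sketch. Only the center pixel value (80) comes from the example above; the remaining image values and the filter are hypothetical:

```python
import numpy as np

# Hypothetical 3x3 image and filter; only the centre pixel (80) is from the text.
image = np.array([[0., 50., 0.],
                  [0., 80., 31.],
                  [33., 90., 0.]])
filt = np.array([[-1., 0., 1.],
                 [-2., 0., 2.],
                 [-1., 0., 1.]])

# A 3x3 image convolved with a 3x3 filter gives a single output value.
out = np.sum(image * filt)

# Bump each filter weight by 1 in turn and record how much the output moves.
d_out_d_filter = np.zeros_like(filt)
for x in range(3):
    for y in range(3):
        bumped = filt.copy()
        bumped[x, y] += 1
        d_out_d_filter[x, y] = np.sum(image * bumped) - out

print(d_out_d_filter[1, 1])
print(np.array_equal(d_out_d_filter, image))
```

Bumping the center weight moves the output by 80, and the full table of changes reproduces the image itself, which is the derivative claimed above.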

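We can put it all together to find the loss gradient for specific filter weights:

\frac{\partial L}{\partial filter(x, y)} = \sum_{i} \sum_{j} \frac{\partial L}{\partial out(i, j)} * \frac{\partial out(i, j)}{\partial filter(x, y)}

We're ready to implement backprop for our conv layer!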

conv.py

    class Conv3x3:
        # ...

        def backprop(self, d_L_d_out, learn_rate):
            '''
            Performs a backward pass of the conv layer.
            - d_L_d_out is the loss gradient for this layer's outputs.
            - learn_rate is a float.
            '''
            d_L_d_filters = np.zeros(self.filters.shape)

            for im_region, i, j in self.iterate_regions(self.last_input):
                for f in range(self.num_filters):
                    d_L_d_filters[f] += d_L_d_out[i, j, f] * im_region

            # Update filters
            self.filters -= learn_rate * d_L_d_filters

            # We aren't returning anything here since we use Conv3x3 as
            # the first layer in our CNN. Otherwise, we'd need to return
            # the loss gradient for this layer's inputs, just like every
            # other layer in our CNN.
            return None

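We apply our derived equation by iterating over every image region / filter and incrementally building up the loss gradients. Once we've covered everything, we update self.filters using SGD just like before. Note the comment explaining why we return None: the derivation for the loss gradient for the inputs is very similar to what we just did and is left as an exercise to the reader :).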
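If you want to double check the filter gradient, a numerical check works here too. In the sketch below, iterate_regions() and conv_forward() are standalone stand-ins that I'm assuming behave like the Conv3x3 helpers from the introductory post (3×3 patches, no padding), and the input, filters, and upstream gradient are random placeholders. Since d_L_d_out is held fixed, the check differentiates the surrogate loss sum(d_L_d_out * out):

```python
import numpy as np

rng = np.random.default_rng(1)
image = rng.normal(size=(5, 5))            # hypothetical tiny input
filters = rng.normal(size=(2, 3, 3)) / 9   # 2 hypothetical 3x3 filters
d_L_d_out = rng.normal(size=(3, 3, 2))     # hypothetical upstream gradient

def iterate_regions(image):
    # Stand-in helper: every 3x3 patch of a convolution without padding.
    h, w = image.shape
    for i in range(h - 2):
        for j in range(w - 2):
            yield image[i:i + 3, j:j + 3], i, j

def conv_forward(image, filters):
    out = np.zeros((image.shape[0] - 2, image.shape[1] - 2, len(filters)))
    for im_region, i, j in iterate_regions(image):
        out[i, j] = np.sum(im_region * filters, axis=(1, 2))
    return out

# Analytic gradient, accumulated the same way as in backprop().
d_L_d_filters = np.zeros(filters.shape)
for im_region, i, j in iterate_regions(image):
    for f in range(len(filters)):
        d_L_d_filters[f] += d_L_d_out[i, j, f] * im_region

def surrogate_loss(flt):
    # Its gradient w.r.t. the conv output is exactly d_L_d_out.
    return np.sum(d_L_d_out * conv_forward(image, flt))

# Numerical gradient, one filter weight at a time (central differences).
eps = 1e-6
numeric = np.zeros_like(filters)
for idx in np.ndindex(filters.shape):
    bump = np.zeros_like(filters)
    bump[idx] = eps
    numeric[idx] = (surrogate_loss(filters + bump) - surrogate_loss(filters - bump)) / (2 * eps)

print(np.allclose(d_L_d_filters, numeric, atol=1e-6))
```

The same pattern (perturb one parameter, compare against the analytic value) is a handy way to test your own solution to the input-gradient exercise.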

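With that, we're done! We've implemented a full backward pass through our CNN. Time to test it out...

Training a CNN

We'll train our CNN for a few epochs, track its progress during training, and then test it on a separate test set. Here's the full code: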

cnn.py

    import mnist
    import numpy as np
    from conv import Conv3x3
    from maxpool import MaxPool2
    from softmax import Softmax

    # We only use the first 1k examples of each set in the interest of time.
    # Feel free to change this if you want.
    train_images = mnist.train_images()[:1000]
    train_labels = mnist.train_labels()[:1000]
    test_images = mnist.test_images()[:1000]
    test_labels = mnist.test_labels()[:1000]

    conv = Conv3x3(8)                   # 28x28x1 -> 26x26x8
    pool = MaxPool2()                   # 26x26x8 -> 13x13x8
    softmax = Softmax(13 * 13 * 8, 10)  # 13x13x8 -> 10

    def forward(image, label):
        '''
        Completes a forward pass of the CNN and calculates the accuracy and
        cross-entropy loss.
        - image is a 2d numpy array
        - label is a digit
        '''
        # We transform the image from [0, 255] to [-0.5, 0.5] to make it easier
        # to work with. This is standard practice.
        out = conv.forward((image / 255) - 0.5)
        out = pool.forward(out)
        out = softmax.forward(out)

        # Calculate cross-entropy loss and accuracy. np.log() is the natural log.
        loss = -np.log(out[label])
        acc = 1 if np.argmax(out) == label else 0

        return out, loss, acc

    def train(im, label, lr=.005):
        '''
        Completes a full training step on the given image and label.
        Returns the cross-entropy loss and accuracy.
        - im is a 2d numpy array
        - label is a digit
        - lr is the learning rate
        '''
        # Forward
        out, loss, acc = forward(im, label)

        # Calculate initial gradient
        gradient = np.zeros(10)
        gradient[label] = -1 / out[label]

        # Backprop
        gradient = softmax.backprop(gradient, lr)
        gradient = pool.backprop(gradient)
        gradient = conv.backprop(gradient, lr)

        return loss, acc

    print('MNIST CNN initialized!')

    # Train the CNN for 3 epochs
    for epoch in range(3):
        print('--- Epoch %d ---' % (epoch + 1))

        # Shuffle the training data
        permutation = np.random.permutation(len(train_images))
        train_images = train_images[permutation]
        train_labels = train_labels[permutation]

        # Train!
        loss = 0
        num_correct = 0
        for i, (im, label) in enumerate(zip(train_images, train_labels)):
            if i > 0 and i % 100 == 99:
                print(
                    '[Step %d] Past 100 steps: Average Loss %.3f | Accuracy: %d%%' %
                    (i + 1, loss / 100, num_correct)
                )
                loss = 0
                num_correct = 0

            l, acc = train(im, label)
            loss += l
            num_correct += acc

    # Test the CNN
    print('\n--- Testing the CNN ---')
    loss = 0
    num_correct = 0
    for im, label in zip(test_images, test_labels):
        _, l, acc = forward(im, label)
        loss += l
        num_correct += acc

    num_tests = len(test_images)
    print('Test Loss:', loss / num_tests)
    print('Test Accuracy:', num_correct / num_tests)

Example output from running the code:

    MNIST CNN initialized!
    --- Epoch 1 ---
    [Step 100] Past 100 steps: Average Loss 2.254 | Accuracy: 18%
    [Step 200] Past 100 steps: Average Loss 2.167 | Accuracy: 30%
    [Step 300] Past 100 steps: Average Loss 1.676 | Accuracy: 52%
    [Step 400] Past 100 steps: Average Loss 1.212 | Accuracy: 63%
    [Step 500] Past 100 steps: Average Loss 0.949 | Accuracy: 72%
    [Step 600] Past 100 steps: Average Loss 0.848 | Accuracy: 74%
    [Step 700] Past 100 steps: Average Loss 0.954 | Accuracy: 68%
    [Step 800] Past 100 steps: Average Loss 0.671 | Accuracy: 81%
    [Step 900] Past 100 steps: Average Loss 0.923 | Accuracy: 67%
    [Step 1000] Past 100 steps: Average Loss 0.571 | Accuracy: 83%
    --- Epoch 2 ---
    [Step 100] Past 100 steps: Average Loss 0.447 | Accuracy: 89%
    [Step 200] Past 100 steps: Average Loss 0.401 | Accuracy: 86%
    [Step 300] Past 100 steps: Average Loss 0.608 | Accuracy: 81%
    [Step 400] Past 100 steps: Average Loss 0.511 | Accuracy: 83%
    [Step 500] Past 100 steps: Average Loss 0.584 | Accuracy: 89%
    [Step 600] Past 100 steps: Average Loss 0.782 | Accuracy: 72%
    [Step 700] Past 100 steps: Average Loss 0.397 | Accuracy: 84%
    [Step 800] Past 100 steps: Average Loss 0.560 | Accuracy: 80%
    [Step 900] Past 100 steps: Average Loss 0.356 | Accuracy: 92%
    [Step 1000] Past 100 steps: Average Loss 0.576 | Accuracy: 85%
    --- Epoch 3 ---
    [Step 100] Past 100 steps: Average Loss 0.367 | Accuracy: 89%
    [Step 200] Past 100 steps: Average Loss 0.370 | Accuracy: 89%
    [Step 300] Past 100 steps: Average Loss 0.464 | Accuracy: 84%
    [Step 400] Past 100 steps: Average Loss 0.254 | Accuracy: 95%
    [Step 500] Past 100 steps: Average Loss 0.366 | Accuracy: 89%
    [Step 600] Past 100 steps: Average Loss 0.493 | Accuracy: 89%
    [Step 700] Past 100 steps: Average Loss 0.390 | Accuracy: 91%
    [Step 800] Past 100 steps: Average Loss 0.459 | Accuracy: 87%
    [Step 900] Past 100 steps: Average Loss 0.316 | Accuracy: 92%
    [Step 1000] Past 100 steps: Average Loss 0.460 | Accuracy: 87%

    --- Testing the CNN ---
    Test Loss: 0.5979384893783474
    Test Accuracy: 0.78

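Our code works! In only 3000 training steps, we went from a model with 2.3 loss and 10% accuracy to 0.6 loss and 78% accuracy.

Want to try or tinker with this code yourself? Run the CNN in your browser. It's also available on GitHub.

We only used a subset of the entire MNIST dataset for this example in the interest of time; our CNN implementation isn't particularly fast. If we wanted to train a MNIST CNN for real, we'd use an ML library like Keras. To illustrate the power of our CNN, I used Keras to implement and train the exact same CNN we just built from scratch: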

cnn_keras.py

    import numpy as np
    import mnist
    from keras.models import Sequential
    from keras.layers import Conv2D, MaxPooling2D, Dense, Flatten
    from keras.utils import to_categorical
    from keras.optimizers import SGD

    train_images = mnist.train_images()
    train_labels = mnist.train_labels()
    test_images = mnist.test_images()
    test_labels = mnist.test_labels()

    # Normalize pixel values from [0, 255] to [-0.5, 0.5]
    train_images = (train_images / 255) - 0.5
    test_images = (test_images / 255) - 0.5

    # Add a channel dimension: (n, 28, 28) -> (n, 28, 28, 1)
    train_images = np.expand_dims(train_images, axis=3)
    test_images = np.expand_dims(test_images, axis=3)

    model = Sequential([
        Conv2D(8, 3, input_shape=(28, 28, 1), use_bias=False),
        MaxPooling2D(pool_size=2),
        Flatten(),
        Dense(10, activation='softmax'),
    ])

    # Note: newer Keras releases name this argument learning_rate instead of lr.
    model.compile(SGD(lr=.005), loss='categorical_crossentropy', metrics=['accuracy'])

    model.fit(
        train_images,
        to_categorical(train_labels),
        batch_size=1,
        epochs=3,
        validation_data=(test_images, to_categorical(test_labels)),
    )

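Running that code on the full MNIST dataset (60k training images) gives us results like this:

    Epoch 1
    loss: 0.2433 - acc: 0.9276 - val_loss: 0.1176 - val_acc: 0.9634
    Epoch 2
    loss: 0.1184 - acc: 0.9648 - val_loss: 0.0936 - val_acc: 0.9721
    Epoch 3
    loss: 0.0930 - acc: 0.9721 - val_loss: 0.0778 - val_acc: 0.9744

We achieve 97.4% test accuracy with this simple CNN! With a better CNN architecture, we could improve that even more: in this official Keras MNIST CNN example, they achieve 99.25% test accuracy after 12 epochs. That's really accurate.

All code from this post is available on GitHub.

Now What?

In this post and An Introduction to Convolutional Neural Networks (CNNs), which together form a two-part series, we gave a thorough introduction to Convolutional Neural Networks, including what they are, how they work, why they're useful, and how to train them. This is just the beginning, though. There's a lot more you could do:

- Experiment with bigger / better CNNs using proper ML libraries like Tensorflow, Keras, or PyTorch.

- Learn about using Batch Normalization with CNNs.

- Understand how Data Augmentation can be used to improve image training sets.

- Read about the ImageNet project and its famous computer vision contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC).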

极客教程
