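Keras makes building deep learning models fast and easy. Often, copying a few examples from the official site is enough to produce surprisingly good results. But when a problem calls for adjusting and tuning a model, or for building the network architecture from a new paper, we can quickly find ourselves out of our depth.

In this tutorial, you will read the original VGG paper and use Keras to build, from scratch, the VGG (Visual Geometry Group, University of Oxford) network architecture, which took first place in localization and second place in classification at ILSVRC-2014 (the ImageNet competition).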

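So what is the point of rebuilding something others have already built? The point is to learn. By completing this exercise, you will:

- Learn more about the VGG architecture

- Learn more about convolutional neural networks

- Learn how to implement a given network architecture in Keras

- Gain a better understanding of the underlying principles and original ideas by reading the paper and implementing parts of it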

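Why start with VGG?

- It is easy to implement

- It achieved excellent results at ILSVRC-2014 (the ImageNet competition)

- It is still widely used today

- Its paper is simple and easy to follow

- Keras already ships a VGG implementation in its distribution, so you can use it for reference and comparison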

Let's dig into the paper

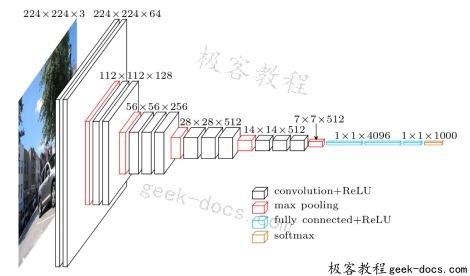

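According to the test results in the paper, configurations D (VGG16) and E (VGG19) perform best. Because the two networks are built with almost the same methods and techniques, we choose to build configuration D (VGG16).

To summarize the network construction details given in the paper: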

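- Input image size: 224 x 224

- Filter (receptive field) size: 3 x 3

- Convolution stride: 1 pixel

- Padding: 1 pixel (for the 3 x 3 filters)

- Pooling layers use a 2 x 2 window with a stride of 2 pixels

- Two fully connected layers with 4096 neurons each

- The final layer is a softmax classification layer with 1000 neurons (one per ImageNet class)

- The activation function is ReLU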
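As a quick sanity check on these settings, the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1, can be evaluated directly. The following is a minimal sketch in plain Python; the helper name `out_size` is introduced here only for illustration and is not part of Keras or of the paper.

```python
def out_size(n, kernel, stride, padding=0):
    """Spatial output size along one axis for a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1

# 3x3 convolution, stride 1, padding 1: the spatial size is preserved
conv_out = out_size(224, kernel=3, stride=1, padding=1)

# 2x2 max pooling, stride 2, no padding: the spatial size is halved
pool_out = out_size(conv_out, kernel=2, stride=2)

print(conv_out, pool_out)  # 224 112
```

This is why the convolution layers in the model below can use `padding='same'`: with a 3 x 3 filter and stride 1, it corresponds to the 1-pixel padding described in the paper.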

Environment of this Jupyter Notebook:

    import platform
    import tensorflow
    import keras

    print("Platform: {}".format(platform.platform()))
    print("Tensorflow version: {}".format(tensorflow.__version__))
    print("Keras version: {}".format(keras.__version__))

    %matplotlib inline
    import matplotlib.pyplot as plt
    import matplotlib.image as mpimg
    import numpy as np
    from IPython.display import Image

    Using TensorFlow backend.
    Platform: Windows-7-6.1.7601-SP1
    Tensorflow version: 1.4.0
    Keras version: 2.1.1

Building the model (Sequential)

    import keras
    from keras.models import Sequential
    from keras.layers import Dense, Activation, Dropout, Flatten
    from keras.layers import Conv2D, MaxPool2D
    from keras.utils import plot_model

    # Define the input shape
    input_shape = (224, 224, 3)  # 224x224 RGB image (height, width, channels)

    # Define the network as a Sequential model
    model = Sequential(name='vgg16-sequential')

    # Convolutional block 1 (block1)
    model.add(Conv2D(64, (3, 3), padding='same', activation='relu', input_shape=input_shape, name='block1_conv1'))
    model.add(Conv2D(64, (3, 3), padding='same', activation='relu', name='block1_conv2'))
    model.add(MaxPool2D((2, 2), strides=(2, 2), name='block1_pool'))

    # Convolutional block 2 (block2)
    model.add(Conv2D(128, (3, 3), padding='same', activation='relu', name='block2_conv1'))
    model.add(Conv2D(128, (3, 3), padding='same', activation='relu', name='block2_conv2'))
    model.add(MaxPool2D((2, 2), strides=(2, 2), name='block2_pool'))

    # Convolutional block 3 (block3)
    model.add(Conv2D(256, (3, 3), padding='same', activation='relu', name='block3_conv1'))
    model.add(Conv2D(256, (3, 3), padding='same', activation='relu', name='block3_conv2'))
    model.add(Conv2D(256, (3, 3), padding='same', activation='relu', name='block3_conv3'))
    model.add(MaxPool2D((2, 2), strides=(2, 2), name='block3_pool'))

    # Convolutional block 4 (block4)
    model.add(Conv2D(512, (3, 3), padding='same', activation='relu', name='block4_conv1'))
    model.add(Conv2D(512, (3, 3), padding='same', activation='relu', name='block4_conv2'))
    model.add(Conv2D(512, (3, 3), padding='same', activation='relu', name='block4_conv3'))
    model.add(MaxPool2D((2, 2), strides=(2, 2), name='block4_pool'))

    # Convolutional block 5 (block5)
    model.add(Conv2D(512, (3, 3), padding='same', activation='relu', name='block5_conv1'))
    model.add(Conv2D(512, (3, 3), padding='same', activation='relu', name='block5_conv2'))
    model.add(Conv2D(512, (3, 3), padding='same', activation='relu', name='block5_conv3'))
    model.add(MaxPool2D((2, 2), strides=(2, 2), name='block5_pool'))

    # Fully connected classifier block
    model.add(Flatten(name='flatten'))
    model.add(Dense(4096, activation='relu', name='fc1'))
    model.add(Dense(4096, activation='relu', name='fc2'))
    model.add(Dense(1000, activation='softmax', name='predictions'))

    # Print the network structure
    model.summary()

    Layer (type)                 Output Shape              Param #
    =================================================================
    block1_conv1 (Conv2D)        (None, 224, 224, 64)      1792
    block1_conv2 (Conv2D)        (None, 224, 224, 64)      36928
    block1_pool (MaxPooling2D)   (None, 112, 112, 64)      0
    block2_conv1 (Conv2D)        (None, 112, 112, 128)     73856
    block2_conv2 (Conv2D)        (None, 112, 112, 128)     147584
    block2_pool (MaxPooling2D)   (None, 56, 56, 128)       0
    block3_conv1 (Conv2D)        (None, 56, 56, 256)       295168
    block3_conv2 (Conv2D)        (None, 56, 56, 256)       590080
    block3_conv3 (Conv2D)        (None, 56, 56, 256)       590080
    block3_pool (MaxPooling2D)   (None, 28, 28, 256)       0
    block4_conv1 (Conv2D)        (None, 28, 28, 512)       1180160
    block4_conv2 (Conv2D)        (None, 28, 28, 512)       2359808
    block4_conv3 (Conv2D)        (None, 28, 28, 512)       2359808
    block4_pool (MaxPooling2D)   (None, 14, 14, 512)       0
    block5_conv1 (Conv2D)        (None, 14, 14, 512)       2359808
    block5_conv2 (Conv2D)        (None, 14, 14, 512)       2359808
    block5_conv3 (Conv2D)        (None, 14, 14, 512)       2359808
    block5_pool (MaxPooling2D)   (None, 7, 7, 512)         0
    flatten (Flatten)            (None, 25088)             0
    fc1 (Dense)                  (None, 4096)              102764544
    fc2 (Dense)                  (None, 4096)              16781312
    predictions (Dense)          (None, 1000)              4097000
    =================================================================
    Total params: 138,357,544
    Trainable params: 138,357,544
    Non-trainable params: 0

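Verifying the total number of trainable parameters

Comparing our model's parameter count against Section 2.3 of the paper, the 138,357,544 parameters of the model we built do match the 138 million parameters reported there.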
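The same total can be reproduced by hand, which helps show where the parameters come from. The sketch below assumes the usual counting rule: a 3 x 3 convolution has (3 * 3 * in_channels + 1) * out_channels parameters (the +1 is the bias), and a dense layer has (inputs + 1) * units. The names `VGG16_CFG` and `count_vgg16_params` are hypothetical helpers introduced for this illustration.

```python
# Configuration D (VGG16): integers are conv output channels,
# "M" marks a 2x2 max pooling layer with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]

def count_vgg16_params(cfg=VGG16_CFG, in_channels=3, input_size=224,
                       fc_units=(4096, 4096, 1000)):
    """Count weights and biases of the conv and dense layers."""
    total = 0
    channels, size = in_channels, input_size
    for item in cfg:
        if item == "M":
            size //= 2  # pooling has no parameters, it only halves the size
        else:
            total += (3 * 3 * channels + 1) * item  # 3x3 kernel weights + bias
            channels = item
    features = size * size * channels  # flattened feature vector: 7 * 7 * 512
    for units in fc_units:
        total += (features + 1) * units  # dense weights + bias
        features = units
    return total

print(f"{count_vgg16_params():,}")  # 138,357,544
```

Note that the first fully connected layer alone accounts for 102,764,544 of these parameters, which is why most of the model's size sits in the classifier rather than in the convolutional blocks.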

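Building the model (Functional API)

Here we define the same network structure using the Keras functional API. For a detailed explanation, refer to the Keras functional API documentation.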

    import keras
    from keras.models import Model
    from keras.layers import Input, Dense, Activation, Dropout, Flatten
    from keras.layers import Conv2D, MaxPool2D

    # Define the input shape
    input_shape = (224, 224, 3)  # 224x224 RGB image (height, width, channels)

    # Input layer
    img_input = Input(shape=input_shape, name='img_input')

    # Convolutional block 1 (block1)
    x = Conv2D(64, (3, 3), padding='same', activation='relu', name='block1_conv1')(img_input)
    x = Conv2D(64, (3, 3), padding='same', activation='relu', name='block1_conv2')(x)
    x = MaxPool2D((2, 2), strides=(2, 2), name='block1_pool')(x)

    # Convolutional block 2 (block2)
    x = Conv2D(128, (3, 3), padding='same', activation='relu', name='block2_conv1')(x)
    x = Conv2D(128, (3, 3), padding='same', activation='relu', name='block2_conv2')(x)
    x = MaxPool2D((2, 2), strides=(2, 2), name='block2_pool')(x)

    # Convolutional block 3 (block3)
    x = Conv2D(256, (3, 3), padding='same', activation='relu', name='block3_conv1')(x)
    x = Conv2D(256, (3, 3), padding='same', activation='relu', name='block3_conv2')(x)
    x = Conv2D(256, (3, 3), padding='same', activation='relu', name='block3_conv3')(x)
    x = MaxPool2D((2, 2), strides=(2, 2), name='block3_pool')(x)

    # Convolutional block 4 (block4)
    x = Conv2D(512, (3, 3), padding='same', activation='relu', name='block4_conv1')(x)
    x = Conv2D(512, (3, 3), padding='same', activation='relu', name='block4_conv2')(x)
    x = Conv2D(512, (3, 3), padding='same', activation='relu', name='block4_conv3')(x)
    x = MaxPool2D((2, 2), strides=(2, 2), name='block4_pool')(x)

    # Convolutional block 5 (block5)
    x = Conv2D(512, (3, 3), padding='same', activation='relu', name='block5_conv1')(x)
    x = Conv2D(512, (3, 3), padding='same', activation='relu', name='block5_conv2')(x)
    x = Conv2D(512, (3, 3), padding='same', activation='relu', name='block5_conv3')(x)
    x = MaxPool2D((2, 2), strides=(2, 2), name='block5_pool')(x)

    # Fully connected classifier block
    x = Flatten(name='flatten')(x)
    x = Dense(4096, activation='relu', name='fc1')(x)
    x = Dense(4096, activation='relu', name='fc2')(x)
    x = Dense(1000, activation='softmax', name='predictions')(x)

    # Create the model
    model2 = Model(inputs=img_input, outputs=x, name='vgg16-funcapi')

    # Print the network structure
    model2.summary()

    Layer (type)                 Output Shape              Param #
    =================================================================
    img_input (InputLayer)       (None, 224, 224, 3)       0
    block1_conv1 (Conv2D)        (None, 224, 224, 64)      1792
    block1_conv2 (Conv2D)        (None, 224, 224, 64)      36928
    block1_pool (MaxPooling2D)   (None, 112, 112, 64)      0
    block2_conv1 (Conv2D)        (None, 112, 112, 128)     73856
    block2_conv2 (Conv2D)        (None, 112, 112, 128)     147584
    block2_pool (MaxPooling2D)   (None, 56, 56, 128)       0
    block3_conv1 (Conv2D)        (None, 56, 56, 256)       295168
    block3_conv2 (Conv2D)        (None, 56, 56, 256)       590080
    block3_conv3 (Conv2D)        (None, 56, 56, 256)       590080
    block3_pool (MaxPooling2D)   (None, 28, 28, 256)       0
    block4_conv1 (Conv2D)        (None, 28, 28, 512)       1180160
    block4_conv2 (Conv2D)        (None, 28, 28, 512)       2359808
    block4_conv3 (Conv2D)        (None, 28, 28, 512)       2359808
    block4_pool (MaxPooling2D)   (None, 14, 14, 512)       0
    block5_conv1 (Conv2D)        (None, 14, 14, 512)       2359808
    block5_conv2 (Conv2D)        (None, 14, 14, 512)       2359808
    block5_conv3 (Conv2D)        (None, 14, 14, 512)       2359808
    block5_pool (MaxPooling2D)   (None, 7, 7, 512)         0
    flatten (Flatten)            (None, 25088)             0
    fc1 (Dense)                  (None, 4096)              102764544
    fc2 (Dense)                  (None, 4096)              16781312
    predictions (Dense)          (None, 1000)              4097000
    =================================================================
    Total params: 138,357,544
    Trainable params: 138,357,544
    Non-trainable params: 0

Training the model

Training the VGG16 model on the ImageNet data is no easy task.

The VGG paper states:

On a system equipped with four NVIDIA Titan Black GPUs, training a single net took 2–3 weeks depending on the architecture.

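In other words, even with four NVIDIA Titan Black GPUs, training the VGG16 model on the ImageNet image set could take 2 to 3 weeks. Even if you can afford that kind of hardware, you still have to spend a lot of time training the model.

Fortunately, Keras not only includes the VGG16 and VGG19 model definitions in its modules, it also provides pretrained weights for both.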

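Conclusion

Some interesting takeaways I learned from this article:

- Building a network in Keras is not hard, but understanding the principles behind the architecture takes a bit more patience

- VGG16 is simple to build and performs well, which is remarkable!

- In VGG16's convolutional layers, the deeper the layer, the smaller the feature map and the larger the number of filters (kernels), which grows from 64 up to 512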
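The last point can be traced block by block. The following sketch, which is only an illustration and uses the hypothetical names `FILTERS_PER_BLOCK` and `trace_feature_maps`, follows the feature map size and filter count after each pooling layer, relying on the fact that the 'same' convolutions keep the spatial size and each 2 x 2 pooling with stride 2 halves it.

```python
# Number of filters used by the conv layers in each of the five VGG16 blocks
FILTERS_PER_BLOCK = [64, 128, 256, 512, 512]

def trace_feature_maps(input_size=224, filters_per_block=FILTERS_PER_BLOCK):
    """Return (block index, spatial size, filters) after each block's pooling layer."""
    size = input_size
    trace = []
    for block, filters in enumerate(filters_per_block, start=1):
        size //= 2  # convs keep the size; the block's pooling layer halves it
        trace.append((block, size, filters))
    return trace

for block, size, filters in trace_feature_maps():
    print(f"block{block}_pool: {size} x {size} x {filters}")
```

The final entry, 7 x 7 x 512, is the 25088-element vector that the Flatten layer passes to the first fully connected layer.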

极客教程
